In this section we present and discuss our simulation results.

Client rejection probability.

We first place the server in the transit domain (the shaded node in the center of Fig. 3). We assume that the threshold of P2Cast is 10% of the video length and that every client has sufficient storage space to cache the patch. For IP multicast-based patching, we use the optimal threshold derived in [2].
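The closed form of the optimal threshold does not survive in the text above. As a rough illustration only, the sketch below uses the expression commonly given for threshold-based patching under Poisson arrivals, `T* = (sqrt(2*lam*L + 1) - 1) / lam`, where `lam` is the client arrival rate and `L` is the video length. Whether this matches the exact form in [2] is an assumption, and the function names and sample values are hypothetical.

```python
import math


def server_rate(threshold: float, lam: float, video_length: float) -> float:
    """Mean server output rate for a given threshold (assumed model).

    Assumes Poisson arrivals with rate `lam` and unlimited bandwidth.
    Each session costs one full stream plus patches averaging T/2 for
    the lam*T clients that join it, and sessions recur every T + 1/lam.
    """
    cost_per_session = video_length + lam * threshold ** 2 / 2.0
    session_spacing = threshold + 1.0 / lam
    return cost_per_session / session_spacing


def optimal_patching_threshold(lam: float, video_length: float) -> float:
    """Threshold that minimizes `server_rate` under the assumed model."""
    if lam <= 0 or video_length <= 0:
        raise ValueError("arrival rate and video length must be positive")
    return (math.sqrt(2.0 * lam * video_length + 1.0) - 1.0) / lam


if __name__ == "__main__":
    # Hypothetical values: 1 arrival per minute, 12-minute video.
    t_star = optimal_patching_threshold(1.0, 12.0)
    print(f"optimal threshold: {t_star:.2f} minutes")
    print(f"server rate at optimum: {server_rate(t_star, 1.0, 12.0):.2f} streams")
```

Under this model the threshold shrinks as the arrival rate grows, which is the behavior the later discussion of Fig. 12 relies on.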

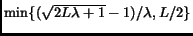

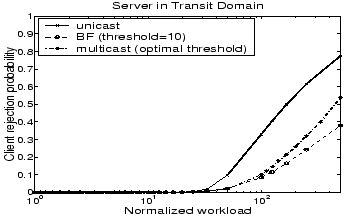

Fig. 4 depicts the rejection probability vs. the normalized workload for unicast, P2Cast using the BF algorithm, and IP multicast-based patching. We observe that P2Cast admits more clients than unicast by a significant margin as the load increases. P2Cast also outperforms IP multicast-based patching, especially when the workload is high. This shows that the peer-to-peer paradigm employed in P2Cast improves its scalability.

Figure 4: Rejection probability comparison for P2Cast, unicast, and IP multicast patching

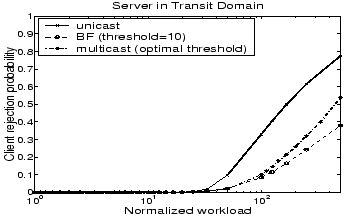

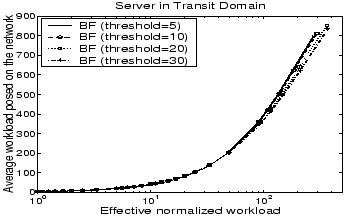

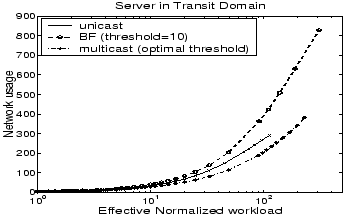

Average workload placed on network (network usage).

Since some clients are rejected by the server due to bandwidth constraints, we use the effective normalized workload to represent the actual workload presented by the clients.

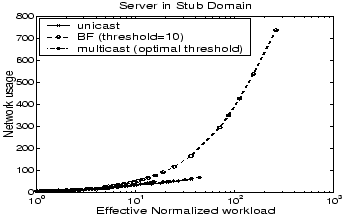

Figure 5: Comparison of the average workload placed on the network for P2Cast, unicast, and IP multicast patching

Fig. 5 illustrates the network usage vs. the effective normalized workload. Since application-level multicast is not as efficient as native IP multicast, P2Cast places more workload on the network than IP multicast-based patching. Interestingly, we find that P2Cast also has higher network usage than the unicast service approach. In [11] we plot the average hop count from the admitted clients to the server for both P2Cast and unicast. The average hop count in unicast tends to be much smaller than that in P2Cast, suggesting that a unicast-based service selectively admits clients that are closer to the server. Intuitively, clients closer to the server are more likely to have sufficient bandwidth along the path. This may explain why the network usage of a unicast service is lower than that of P2Cast.

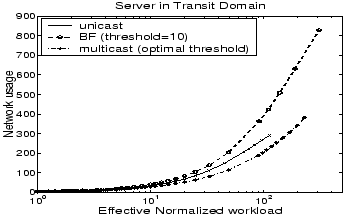

Server stress.

Fig. 6 shows the server stress for the different schemes. The unicast server is the most stressed. The server stress for P2Cast is even lower than that of IP multicast-based patching. Although application-level multicast is not as efficient as native IP multicast, the P2P paradigm employed in P2Cast effectively alleviates the workload placed on the server: in P2Cast, clients take over from the server the responsibility of serving the patch whenever possible.

Figure 6: Server stress comparison for P2Cast, unicast, and IP multicast patching

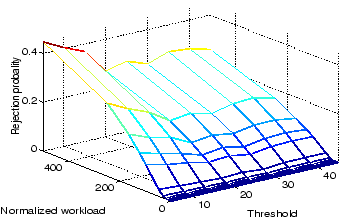

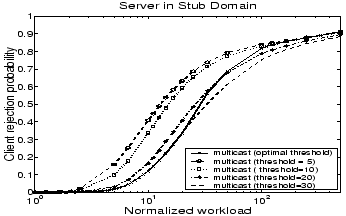

Threshold impact on the performance of P2Cast.

In general, the scalability of P2Cast improves as the threshold increases. Fig. 7 depicts the rejection probability of the P2Cast scheme with different thresholds. As the threshold increases, more clients can be admitted. Intuitively, as the threshold increases, more clients arrive during a session and thus more clients can share the base stream. However, the storage space required at each client also increases. We also observe in our experiment that the rejection probability decreases faster when the threshold is smaller than 20% of the video length, and flattens out afterwards. This suggests that we should choose the threshold carefully so that the most benefit is obtained without overburdening the client.

Fig. 8 shows that the average workload imposed on the network varies only marginally with the threshold in P2Cast.

Figure 7: Threshold impact on client rejection probability

Figure 8: Threshold impact on the average workload placed on the network

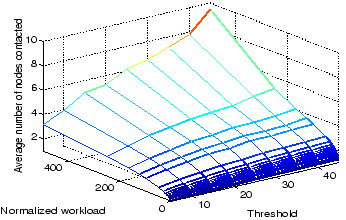

Figure 9: Threshold impact on startup delay

In VoD service, the startup delay is another important metric. In the BF algorithm described in Section 3, every step incurs a certain delay. However, we expect the available bandwidth measurement conducted at Step 2 to be the most time-consuming. Therefore, the startup delay in P2Cast depends heavily on the number of candidate clients that a new client has to contact before being admitted. We use the average number of candidate nodes a new client has to contact before being admitted as an estimate of the startup delay. Fig. 9 depicts the average number of nodes that need to be contacted for different thresholds in P2Cast. As either the normalized workload or the threshold value increases, the average number of nodes that need to be contacted also increases. Therefore, although a larger threshold in P2Cast allows more clients to be served, it also leads to a larger joining delay.
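The startup-delay estimate described above reduces to a simple average over admitted clients. The following is a minimal sketch of that bookkeeping, not the simulator used for Fig. 9. The record layout (`admitted`, `contacts`) and the function name are hypothetical, and rejected clients are excluded on the assumption that the metric covers only clients that are eventually admitted.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class JoinRecord:
    """Outcome of one join attempt (hypothetical log format)."""
    admitted: bool  # whether the client was eventually admitted
    contacts: int   # candidate nodes contacted during the attempt


def average_contacts(records: Iterable[JoinRecord]) -> Optional[float]:
    """Mean number of candidate nodes contacted per admitted client.

    Returns None when no client was admitted, since the average is
    undefined in that case.
    """
    counts = [r.contacts for r in records if r.admitted]
    if not counts:
        return None
    return sum(counts) / len(counts)


if __name__ == "__main__":
    log = [JoinRecord(True, 1), JoinRecord(True, 3), JoinRecord(False, 7)]
    print(average_contacts(log))
```

Multiplying this average by a per-contact bandwidth measurement time would give a delay in seconds, but that time is not stated in the text, so the sketch stops at the contact count.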

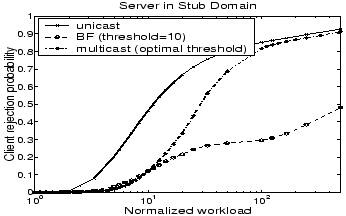

Effect of server bandwidth.

We investigate the effect of server bandwidth on the performance by moving the server to one of the stub nodes in Fig. 3 (a shaded node in the stub domain). Here the server has less bandwidth than when it is placed in the transit domain. Fig. 10 and Fig. 11 illustrate the rejection probability and the average workload placed on the network. Overall, the rejection probability of all three approaches with the server in the stub domain is higher than that with the server in the transit domain (see Fig. 4) due to the decreased server bandwidth. As for the average workload on the network, P2Cast again generates the most workload. Similarly, the admitted clients in unicast tend to be closer to the server, and this trend is even more evident than with the server in the transit domain [11].

Figure 10: Client rejection probability vs. normalized workload

Figure 11: Network usage vs. effective normalized workload

Figure 12: Client rejection probability of IP multicast patching

Fig. 12 depicts the rejection probability of IP multicast-based patching with the optimal threshold and several fixed thresholds. Interestingly, we observe that IP multicast-based patching with the optimal threshold performs badly when the normalized workload is high. The optimal threshold is derived to minimize the workload placed on the server, under the assumption that the server and the network have unlimited bandwidth to support the streaming service. As the client arrival rate increases, the optimal threshold decreases. This leads to an increasing number of sessions. Since one multicast channel is required for each session, a server with limited bandwidth cannot support a large number of sessions while also providing patch service. This leads to the high rejection probability when the client arrival rate is high. Fig. 12 compares IP multicast-based patching using the optimal threshold with that using fixed thresholds of 5, 10, 20, and 30 percent of the video length. When the arrival rate is high, a larger fixed threshold actually helps IP multicast patching reduce the rejection rate. This suggests that the "optimal threshold" may not be optimal in a real network setting.
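To make the argument above concrete, the sketch below estimates how many base-stream sessions overlap in time when the threshold shrinks with the arrival rate. It assumes Poisson arrivals, a new session starting with the first arrival after the previous threshold expires (so a mean spacing of `T + 1/lam` between sessions), and the commonly cited closed form `T* = (sqrt(2*lam*L + 1) - 1) / lam` for the optimal threshold. None of these details are taken from the text, and all names and sample values are hypothetical.

```python
import math


def optimal_threshold(lam: float, video_length: float) -> float:
    """Assumed closed form for the server-load-minimizing threshold."""
    return (math.sqrt(2.0 * lam * video_length + 1.0) - 1.0) / lam


def concurrent_sessions(threshold: float, lam: float, video_length: float) -> float:
    """Mean number of base streams in progress at any time.

    Sessions start at rate 1 / (T + 1/lam) and each base stream lasts
    `video_length`, so by Little's law the mean number in progress is
    video_length / (T + 1/lam).
    """
    return video_length / (threshold + 1.0 / lam)


if __name__ == "__main__":
    video_length = 100.0               # hypothetical, in minutes
    fixed = 0.20 * video_length        # fixed threshold: 20% of the video
    for lam in (0.1, 1.0, 10.0):       # arrivals per minute
        t_opt = optimal_threshold(lam, video_length)
        n_opt = concurrent_sessions(t_opt, lam, video_length)
        n_fix = concurrent_sessions(fixed, lam, video_length)
        print(f"lam={lam:5.1f}  T*={t_opt:6.2f}  "
              f"channels(T*)={n_opt:6.2f}  channels(20%)={n_fix:5.2f}")
```

In this simplified model the number of multicast channels needed with the optimal threshold grows roughly with the square root of the arrival rate, while a fixed threshold of 20% of the video never needs more than five, which is consistent with the trend reported for Fig. 12.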

Yang Guo

2003-03-27
