Steve Pavett, Nihal Samarawera (a), Neil M. Hamilton, and Gorry Fairhurst (a)

Medical Faculty Computer Assisted Learning Unit, (a) Department of Engineering, University of Aberdeen, Scotland

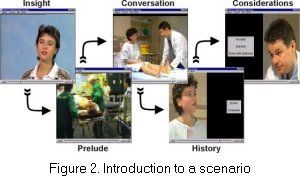

Each scenario starts with introductory video and audio clips that present: insight from a patient about their experience, a preamble to the scenario, the opening conversation of the scenario, a brief patient history, and points for the user to consider during the scenario (Fig. 2).

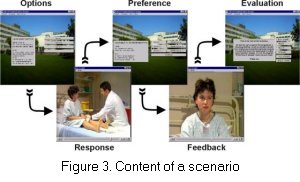

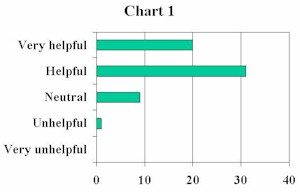

The user views three different responses given by the clinician and indicates which one they prefer. Feedback follows, together with an opportunity to change the initial preference. Each scenario ends with an evaluation on a five-point scale ranging from "Very helpful" to "Very unhelpful" (Fig. 3).

The choices made by the user at various stages are logged. This information is being used both for a pedagogical study and the design of the network client. Several supporting media clips are incorporated into the final application giving a total of approximately 1.75 h of video and audio recordings.

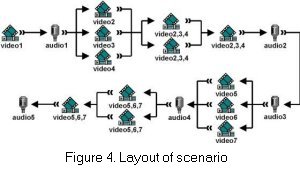

A typical scenario consists of seven video clips and five audio clips, although two scenarios include a second scene. The longest video clips correspond to more than 100 MB of data (MPEG-2 at 4 Mbps). Where there is an option, the user is asked which clip they would like to view next, until all clips have been viewed (Fig. 4).

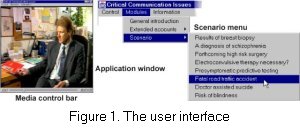

The application was broken down into three modules: user interface, network client and server. Sun Microsystems' Java programming language was chosen to develop the user interface for its cross-platform specification and network-ready distribution method. The user interface passes requests for media to the network client; both run concurrently on the client machine. A session server processes these requests and forwards them to a clip server. Final year medical students began using the application in late October 1997. A Web client version of the user interface was written to access media from a WWW server, in order to study lower bit rate delivery using 1 Mbps MPEG-1. The user interface was then married to the network client software module.

It was anticipated that the local bandwidth would far exceed the capacity available in a campus network. Delivering video data simultaneously to a large number of platforms would exhaust the capacity of even a high bandwidth network with a powerful server. A network protocol has therefore been developed to mitigate the demands on the server and the network and to tolerate occasional packet loss due to network congestion.

A classroom of ten 133 MHz Pentium multimedia PCs running Microsoft Windows NT4 Workstation was set up. Each machine was connected to a switched 10 Mbps hub. The hub receives its up-link on an ATM port (155 Mbps to the Aberdeen metropolitan area network). The video clip server is a Sun Ultra with a high performance disk array, connected directly to the network by an ATM interface.

From the limited number of options the application can begin scheduling the next items for download, before they are selected. This allows for a more efficient use of bandwidth. Several concurrent threads can be started that download the optional video material. When the user makes their choice for the next clip, the appropriate thread is given a higher priority, stopping the others from executing until it has finished.
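The prefetching idea can be sketched in Java, the language used for the user interface. This is a minimal illustration rather than the application's code: the class `PrefetchScheduler` and its methods are hypothetical names, and each download is represented by an arbitrary `Runnable`. Note that Java thread priorities are only hints to the scheduler, so a real client would need an additional mechanism to hold back the unselected downloads completely.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch: one background thread per optional clip.
final class PrefetchScheduler {
    private final Map<String, Thread> downloads = new LinkedHashMap<>();

    /** Registers a background download for one of the optional clips. */
    void schedule(String clipName, Runnable download) {
        Thread t = new Thread(download, "prefetch-" + clipName);
        t.setDaemon(true);                       // do not keep the JVM alive
        t.setPriority(Thread.NORM_PRIORITY);     // all options start equal
        downloads.put(clipName, t);
    }

    /** Starts every registered download concurrently. */
    void startAll() {
        for (Thread t : downloads.values()) {
            t.start();
        }
    }

    /** Called when the user picks the next clip: favour that download. */
    void choose(String clipName) {
        for (Map.Entry<String, Thread> e : downloads.entrySet()) {
            boolean chosen = e.getKey().equals(clipName);
            e.getValue().setPriority(chosen ? Thread.MAX_PRIORITY
                                            : Thread.MIN_PRIORITY);
        }
    }

    /** Current priority of the download for the given clip. */
    int priorityOf(String clipName) {
        return downloads.get(clipName).getPriority();
    }
}
```

A caller would register one download per option, call `startAll()` while the current clip plays, and call `choose(...)` as soon as the user makes a selection.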

The inclusion of reviewable material limits what can be achieved by scheduling. If the user decides to return to a video clip, the cached version may already have been overwritten, so the application must abandon its current scheduling activity to retrieve the previously viewed clip. The possible permutations of paths taken to view the material are therefore kept to a minimum. How long a clip is kept before it is overwritten is purely a matter of available disk space.

In the classroom environment there is occasionally commonality between the video clips being viewed by different students performing the same exercise. This will increase with the number of concurrent users. Unicast (point-to-point) protocols such as HTTP do not scale well within the network, since they take no account of this concurrency. In order to improve the scalability of the application, a different transfer model is required.

The service was designed with three goals: the server should not be concerned with the number or location of the clients, the clients may start and stop transfers at any time and caching of parts of a video clip (blocks) may be performed by either clients or independent repair agents [1].

The service consists of two protocols: one co-ordinates the transfer of media data to each client (the session protocol), the other provides the actual transfer mechanism which transmits a clip from the server to one or more clients (the transfer protocol). These have been constructed in such a way that they may be located on a single server platform or distributed across several platforms (each instance of a transfer server can be distributed over various locations).

The transfer is started by the transmission of an announcement packet using a well-known multicast address. This packet contains the details of the transfer (file name, context, size, transfer rate, port numbers and transfer multicast address). Each client uses this information to configure its transfer client. Subsequently the Time-Line client uses Application Layer Framing (ALF) [2, 3] to uniquely identify each packet it receives as an offset from the start of the clip. Relatively large blocks are used (1.4 KB), making the overhead from ALF headers low (~2%).
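The value of identifying each packet by its offset from the start of the clip is that a receiver can place any packet directly into the clip, whatever order packets arrive in. The following Java sketch illustrates this. The header layout is an assumption made purely for illustration (a single 64-bit offset); the source does not specify the actual ALF header format, and `AlfBlock` is a hypothetical name.

```java
import java.nio.ByteBuffer;

// Hypothetical packet layout: [8-byte clip offset][payload bytes].
final class AlfBlock {
    static final int HEADER_BYTES = 8; // assumed, not taken from the paper

    /** Builds a packet carrying the payload and its offset in the clip. */
    static byte[] encode(long clipOffset, byte[] payload) {
        ByteBuffer buf = ByteBuffer.allocate(HEADER_BYTES + payload.length);
        buf.putLong(clipOffset);
        buf.put(payload);
        return buf.array();
    }

    /** Reads the clip offset from the packet header. */
    static long offsetOf(byte[] packet) {
        return ByteBuffer.wrap(packet).getLong();
    }

    /** Copies the payload into the clip buffer at the offset it names. */
    static void place(byte[] packet, byte[] clip) {
        int offset = (int) offsetOf(packet);
        System.arraycopy(packet, HEADER_BYTES, clip, offset,
                         packet.length - HEADER_BYTES);
    }

    /** Header overhead as a fraction of the payload size. */
    static double overhead(int headerBytes, int payloadBytes) {
        return (double) headerBytes / payloadBytes;
    }
}
```

For scale, a header of about 28 bytes on a 1400-byte block gives `overhead(28, 1400)` = 0.02, which matches the ~2% figure; whether the authors counted their header this way is not stated.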

The transfer protocol is receiver-based, employing a stateless server [4], in that the server is unaware of the number of receivers or the progress of individual receivers. Each receiver must take responsibility for its own data transfer. Once initiated, the server transmits an entire video clip as a sequence of blocks using UDP, ensuring a specified gap between successive packets (rate control). The server may periodically assess the bandwidth used for its transmissions and recalculate a rate limit for future use.
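The gap between successive packets follows directly from the block size and the target rate: gap = (block size in bits) / (rate in bits per second). The Java sketch below works this out and paces a sequence of sends accordingly. It assumes that 1.4 KB means 1400 bytes and uses the 4 Mbps MPEG-2 rate as an example target; `RateControl` and the `sendBlock` callback are hypothetical stand-ins for the real UDP transmission.

```java
import java.util.concurrent.locks.LockSupport;
import java.util.function.IntConsumer;

// Hypothetical sketch of sender-side rate control by inter-packet gap.
final class RateControl {

    /** Gap between packet starts, in nanoseconds, for a target bit rate. */
    static long gapNanos(int blockBytes, long rateBitsPerSecond) {
        return blockBytes * 8L * 1_000_000_000L / rateBitsPerSecond;
    }

    /**
     * Calls sendBlock for block indices 0..blockCount-1, never sending a
     * block before its scheduled time. Deadlines are absolute, so a late
     * send does not push back all the sends that follow it.
     */
    static void sendPaced(int blockCount, long gapNanos, IntConsumer sendBlock) {
        long next = System.nanoTime();
        for (int i = 0; i < blockCount; i++) {
            long wait;
            while ((wait = next - System.nanoTime()) > 0) {
                LockSupport.parkNanos(wait); // may wake early, hence the loop
            }
            sendBlock.accept(i);
            next += gapNanos;
        }
    }
}
```

Under these assumptions `gapNanos(1400, 4_000_000)` is 2,800,000 ns, that is, one block every 2.8 ms.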

In order to recover lost packets once the transmission is complete, each client independently determines which blocks were not received. A retransmission phase then sends these blocks to clients [5].
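Because each block is identified by its offset, a client can track what it has received with one bit per block and list the gaps once the transmission ends. The Java sketch below shows one way to do this; `ReceiveMap` is a hypothetical name, and how the list of missing blocks is reported for retransmission is not covered here, since the source leaves that to [5].

```java
import java.util.ArrayList;
import java.util.BitSet;
import java.util.List;

// Hypothetical per-clip record of which blocks a client has received.
final class ReceiveMap {
    private final int blockSize;
    private final int blockCount;
    private final BitSet received;

    ReceiveMap(long clipBytes, int blockSize) {
        this.blockSize = blockSize;
        // Round up so that a short final block is counted.
        this.blockCount = (int) ((clipBytes + blockSize - 1) / blockSize);
        this.received = new BitSet(blockCount);
    }

    /** Records the arrival of the block that starts at clipOffset. */
    void markReceived(long clipOffset) {
        received.set((int) (clipOffset / blockSize));
    }

    /** Offsets of all blocks not yet received, in ascending order. */
    List<Long> missingOffsets() {
        List<Long> missing = new ArrayList<>();
        for (int i = received.nextClearBit(0); i < blockCount;
                 i = received.nextClearBit(i + 1)) {
            missing.add((long) i * blockSize);
        }
        return missing;
    }
}
```

Duplicate packets are harmless in this scheme, because setting an already set bit changes nothing.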

Maximum throughput over a switched 10 Mbps (or shared 100 Mbps) Ethernet segment is approximately 8 Mbps, with only a small amount of background traffic. On a 10 Mbps shared segment this drops to about 2 Mbps, which rules out this type of network for high quality multimedia delivery.

The session server keeps a list of all the clips currently being sent, those pending transmission and the current bandwidth commitments. The transfer server updates this information as it progresses through the schedule.
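The bookkeeping described above can be pictured as a small ledger of active transfers, pending transfers and committed bandwidth. The Java sketch below is an illustration only: the admission rule (start a transfer only if it fits within a fixed capacity, otherwise queue it) is an assumption, as are the class name `SessionSchedule` and its methods. The source states what the session server records, not the policy it applies.

```java
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical ledger of active and pending transfers (rates in bit/s).
final class SessionSchedule {
    private final long capacityBps;
    private long committedBps = 0;
    private final Map<String, Long> sending = new LinkedHashMap<>();
    private final Map<String, Long> pending = new LinkedHashMap<>();

    SessionSchedule(long capacityBps) {
        this.capacityBps = capacityBps;
    }

    /** Returns true if the clip is being sent, false if it is queued. */
    boolean request(String clip, long rateBps) {
        if (sending.containsKey(clip)) return true;  // share this transfer
        if (pending.containsKey(clip)) return false; // already queued
        if (committedBps + rateBps <= capacityBps) {
            sending.put(clip, rateBps);
            committedBps += rateBps;
            return true;
        }
        pending.put(clip, rateBps);
        return false;
    }

    /** Called when a transfer completes; starts queued clips that now fit. */
    void finished(String clip) {
        Long rate = sending.remove(clip);
        if (rate == null) return;
        committedBps -= rate;
        Iterator<Map.Entry<String, Long>> it = pending.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> e = it.next();
            if (committedBps + e.getValue() <= capacityBps) {
                sending.put(e.getKey(), e.getValue());
                committedBps += e.getValue();
                it.remove();
            }
        }
    }

    boolean isSending(String clip) { return sending.containsKey(clip); }

    long committedBps() { return committedBps; }
}
```

A second request for a clip that is already being sent commits no further bandwidth, which reflects the aim of serving several clients from one transfer.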

Initial user tests (n=61) have shown that the users seem to find the application generally quite helpful (Chart 1). A common pattern of usage is to view two or three scenarios and some of the additional media available, constituting approximately 30 min usage on average.

The use of MPEG-2 in this type of environment is new. More solutions for the playing back of MPEG-2 on the PC desktop will be coming to market during 1998, reducing the cost of equipping multimedia PCs with hardware decoders. DVD players are now being incorporated into top end multimedia PCs, also increasing the number of machines with MPEG-2 playback.

The project has shown that a suitable protocol may be developed to provide high-speed delivery of MPEG-2 data using this novel implementation approach in a scalable manner. When using this protocol, it is important to consider the style of network being used and the minimal specification of the client platform, which may otherwise limit the sustainable transfer rate.

A number of facets of the design of the CAL application have been discussed. An important one is the placement of audio clips between critical points in the application's execution, which introduces latency into the load a single user places on the network. The project intends to investigate further the media requirements for classroom-based teaching, particularly the organisation of material into network-friendly structures. We will also attempt to quantify the network requirements further, and to determine the impact of these issues on different network topologies and components.