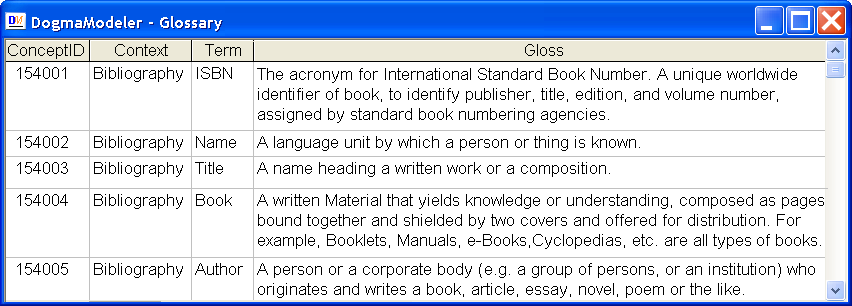

Figure 1. A list of concepts described by glosses.

An ontology in general, is a shared understanding (i.e. semantics) of a certain domain, axiomatized and represented formally in a computer resource. By sharing an ontology, autonomous and distributed applications can meaningfully communicate to exchange data and make transactions interoperate independently of their internal technologies.

The meaning in an ontology (i.e. the semantics of the vocabulary used in this ontology) is supposed to be represented in a logical form. In other words, an ontology becomes a logical theory where its logical statements (i.e. axioms)[1] are supposed to account for the intended meaning of the vocabulary.

According to Gruber [5] an ontology is "an explicit specification of a conceptualization"; referring to the extensional notion of a conceptualization as found in [10].

This motivates us to understand the relationship between a domain vocabulary and the specification of its intended meaning in a logical theory. In other words, how much of the intended meaning is captured explicitly in a logical theory. In the following we focus on two fundamental aspects:

First, in general, it is not possible to build a logical theory to specify the complete and exact intended meaning of a domain vocabulary[2]. In practice, the level of detail that is appropriate to explicitly capture and represent is subject to what is reasonable and plausible for applications. Other details will have to remain implicit assumptions. These assumptions are usually denoted in linguistic terms that we use to lexicalize concepts, and this implicit character follows from our interpretation of these linguistic terms. On the relationship between concepts and their linguistic terms Avicenna (980-1037 AC) [15] argued that:

"There is a strong relationship/dependence between concepts and their linguistic terms, change on linguistic aspects may affect the intended meaning... Therefore logicians should consider linguistic aspects (as they are). ..."[3] .

Indeed, the linguistic terms that we usually use to name symbols in a logical theory convey some important assumptions, which are part of the conceptualization (i.e. intended meaning) that underlie the logical theory. We believe that these assumptions should not be excluded or ignored, as indeed they are part of our conceptualization.

Second, unlike lexical resources, which usually are built for general purposes, when building an ontology, there will always be intended or expected usability requirements "at hand". These usability requirements influence the independency level of ontology axioms. In the problem-solving research community, this is called the interaction problem. Bylander and Chandrasekaran argue that:

"Representing knowledge for the purpose of solving some problem is strongly affected by the nature of the problem and the inference strategy to be applied to the problem." [20]

The main challenge of usability influence is that different usability perspectives (i.e. different purposes of what an ontology is made for and how it will be used) lead to different - and sometimes conflicting - axiomatizations although these axiomatizations might agree at the domain level4 [11],[12].

Hence, we share Guarino and Giaretta's viewpoint [7], that an ontology only approximates its underlying conceptualization; and that a domain axiomatization should be interpreted intensionally[5], referring to the intensional notion of a conceptualization. Guarino and Giaretta pointed out that Gruber's definition [5] does not adequately fit the purposes of an ontology. They pointed out that according to Gruber's definition, the re-arrangement of domain objects (i.e. different state of affairs) corresponds to different conceptualizations. Guarino and Giaretta argue correctly that a conceptualization benefits from invariance under changes that occur at the instance level by transitions between merely different "states of affairs" in a domain, and thus should not be extensional. Instead, they propose a conceptualization as an intensional semantic structure (i.e. abstracting from the instance level), which encodes implicit rules constraining the structure of a piece of reality. Indeed, this definition allows for the focus on the meaning of domain vocabularies (by capturing their intuitions) independently of a state of affairs. See [6] for the details and formalisms.

Not only as a matter of definition (i.e. of what an ontology is and how its axioms are interpreted), but also representing semantics in a logical theory triggers other ontology engineering challenges. For example, the maintenance of (specially large-scale and multi-domain) ontologies becomes a complex process because understanding the meaning of an individual vocabulary needs one to browse and understand many different formal axioms. In case of lightweight ontologies (e.g. with a minimum number of axioms, where some critical assumptions about the meaning remain implicit), it will be more difficult for different ontology developers and maintainers, to know what was originally intended, or what the modeling decisions and choices were.

Accordingly, in order to achieve efficient maintenance and evolution, critical assumptions that make clear the factual meaning of an ontology vocabulary should be rendered and included in the ontology, even if informally, to facilitate both users' and developers' commonsense perception of the subject matter. It is important, not only for future maintenance but also advised for the collaborative and distributed development of ontologies.

In this paper, we propose that (in addition to formal axioms) each vocabulary in an ontology should have a gloss (see section 2). For ontology engineering purposes, we shall define a gloss as an informal (but controlled) description that accounts for the factual meaning of a vocabulary. A set of guidelines on what should and should not be provided in a gloss shall also be presented.

Furthermore, in section 3 we propose to incorporate existing linguistic resources in the ontology modeling process. We will clarify the importance of using lexical resources as a consensus reference in ontology engineering (for investigating and rooting concepts), and so enabling the adoption of the glosses found in these resources. We present an ontology engineering tool (called DogmaModeler), and illustrate its adoption of WordNet's glosses in ontology modeling.

In addition to its formal definition, we propose that each concept in an ontology to be also described by a gloss. A gloss is an auxiliary informal description for the commonsense perception of humans of the intended meaning of a linguistic term. See Figure 1. An ontology, in this way, will have twofold parts: its typical formal axioms (i.e. concepts, relations, and rules/constraints), and informal descriptions (i.e. glosses of concepts).

The purpose of a gloss is not to provide or catalogue general information and comments about a concept, as conventional dictionaries and encyclopedias do [14]. A gloss, for formal ontology engineering purposes, is supposed to render factual knowledge that is critical to understanding a concept, but that is unreasonable, implausible, or very difficult to formalize and/or articulate explicitly.

Figure 1. A list of concepts described by glosses.

The following are some guidelines to consider when deciding what should and should not be provided in a gloss.

1.It should start with the principal/super type of the concept being defined. For example, "Search engine: A computer program that ...", "Invoice: A business document that..."University: An institution of ...".

2.It should be written in the form of propositions, offering the reader inferential knowledge that helps him to construct the image of the concept. For example, instead of defining 'Search engine' as "A computer program for searching the internet", or "One of the most useful aspects of the World Wide Web. Some of the major ones are Google, Galaxy... .". One can also say "A computer program that enables users to search and retrieve documents or data from a database or from a computer network...".

3.More importantly, it should focus on distinguishing characteristics and intrinsic properties that differentiate the concept from other concepts. For example, compare the following two glosses of a 'Laptop computer': (1) "A computer that is designed to do pretty much anything a desktop computer can do. It runs for a short time (usually two to five hours) on batteries"; and (2) "A portable computer small enough to use in your lap...". Notice that according to the first gloss, a 'server computer' running on batteries can be seen as a laptop computer; also, a 'Portable computer' that is not running on batteries is not a 'Laptop computer'.

4.The use of supportive examples is strongly encouraged: (1) to clarify true cases that are commonly known to be false, or false cases that are known to be true; and (2) to strengthen and illustrate distinguishing characteristics (by using examples and counter-examples). The examples can be types and/or instances of the concept being defined. For example: "Legal Person: An entity with legal recognition in accordance with law. It has the legal capacity to represent its own interests in its own name, before a court of law, to obtain rights or obligations for itself, to impose binding obligations, or to grant privileges to others, for example as a plaintiff or as a defendant. A legal person exists wherever the law recognizes, as a matter of policy, the personality of any entity, regardless of whether it is naturally considered to be a person. Recognized associations, relief agencies, committees and companies are examples of legal persons".

5.It should be consistent with the formal axioms in the ontology. In other words, what have said in a gloss should not contradict the formal axioms, and vice versa.

6.It should be sufficient, clear, and easy to understand[6].

One may notice that the information provided in a gloss can be translated, in principle, into formal axioms. However, recall that both should be seen and used in complement rather than as alternatives.

Glosses play a significant role during the ontology development, deployment, and evolution phases. As ontologies are being developed, reviewed, used, and maintained by many different people over different times and locations. Indeed, glosses are easier to understand and agree on than formal definitions, especially for non-intellectual domain experts. Glosses are a useful mechanism for understanding concepts individually without needing to browse and reason on the position of concepts within an axiomatized theory. Further, compared with formal definitions, glosses help to build a "deeper" intuition about concepts, by denoting implicit or tacit assumptions.

This approach has been applied in the CCFORM project (IST-2001-34908, 5th framework) where we have led the ontology development task, for developing a Customer Complaint Ontology (CCOntology) [11],[13]. Intensive discussions were carried out (by legal experts, market experts, application-oriented experts) for almost every gloss. We have found that the gloss modeling process is a great mechanism for brainstorming, domain analyses, domain understanding and for reaching (and documenting) consensus. Our approach to build this CCOntology was: 1) define the glosses of the main concepts, build the ontology (i.e. the formal part), 3) refine and extend the glosses. In other words, the glosses were developed (and reviewed) over several iterations. The first iteration was accomplished by a few (selected) experts before starting to build the formal part of the ontology. Further iterations have been carried out in parallel with the formal part. The final draft was reviewed and approved by several topic panels. Notice that in this way, we also allowed non-ontology experts and lexicographers to participate actively in the ontology modeling and reviewing process. Some partners have even noted that the glosses are the most useful and reusable[7] component in the ontology. The glosses, which have been developed in English, have played the role of the key reference for lexicalizing the ontology into 11 other European languages. Translators have acknowledged that it guided their understanding of the intended meanings of the terms and allowed them to achieve better translation quality. See [11] for the details and lessons learnt.

Important lessons on "term documentation" can also be learned from another approach[19] for building an enterprise ontology.

In this section we present the second goal of the paper. We shall discuss the role of a linguistic resource in ontology engineering: 1) as consensus reference for investigating and rooting ontology concepts, and 2) as a resource of glosses.

One may wonder how ontology builders investigate the meaning of a vocabulary and how a consensus can be reached about it. As we have discussed earlier, this process usually is influenced by usability perspectives and requirements at hand (i.e. why this meaning is axiomatized for, and how it will be used). Many researchers also admit that a conceptualization reflects a particular viewpoint and that it is entirely possible that every person has his own concepts. For example, Bench-Capon and Malcolm argued in [2] that conceptualizations are likely to be influenced by personal tastes and may reflect fundamental disagreements. In our opinion, herein lies the importance of linguistic resources.

Linguistic resources (such as lexicons, dictionaries, and glossaries) can be used as consensus references to root ontology concepts. In other words, ontology concepts and axioms can be investigated using such linguistic resources and it can be determined whether a concept is influenced by personal tastes or usability perspectives. We explain this idea further in the following paragraphs.

The importance of using linguistic resources in this way lies in the fact that a linguistic resource renders the intended meaning of a linguistic term as it is commonly "agreed" among the community of its language. The set of concepts that a language lexicalizes through its set of word-forms is generally an agreed conceptualization[8] [18]. For example, when we use the English word 'book', we actually refer to the set of implicit rules that are common to English-speaking people for distinguishing 'books' from other objects. Such implicit rules (i.e. concepts) are learned and agreed from the repeated use of word-forms and their referents. Usually, lexicographers and lexicon developers investigate the repeated use of a word-form (e.g. based on a comprehensive corpus) to determine its underlying concept(s) [1],[16].

For example, suppose in a Bibliography ontology -that captures the notions of Book, Publisher, etc.- you find an axiom stating that "each book must have an ISBN value". Does this axiom really account for the intended meaning of the concept Book? Is this axiom is a necessary property for each book in the world, or is it only mandatory for some applications (e.g. online bookstores). Given the definition of the term 'book' found in WordNet (a written work or composition that has been published, printed on pages bound together), one can judge that an ISBN is not a necessary property for every instance of a book, because e.g. manuals or master theses are books but they do not have ISBN values. Therefore, the notion of ISBN cannot be used as a differentiating criterion to define the concept Book. Notice that such judgments cannot be based on the literal interpretation of the term definition, but should be based on the intuition that such short definitions provide.

Although linguistic resources do not represent absolute agreements on or correctness of meanings, but (from our methodological viewpoint) they do improve the quality of the ontological definitions. For more precision, one may use several linguistic resources to investigate and root ontology concepts.

In short, a way preventing ontology builders from imposing their personal viewpoints and usability perspectives at the conceptual level is, by investigating and rooting the ontology concepts at the level of agreed human language conceptualization. This involves making a distinction between a personal viewpoint and a community viewpoint. By doing this, we are (indirectly) investigating and rooting our ontology concepts at the domain level, because the conceptualization of a language emerges from the repeated use of linguistic terms and their referents in real life domains.

Our approach allows for the adoption and reuse of many available lexical resources. Lexical resources (such as lexicons, glossaries, thesauruses, and dictionaries) are indeed important resources of domain concepts. Some resources focus mainly on the morphological issues of terms, rather than categorizing and clearly describing their intended meanings. Depending on its description of term meaning(s), its ontological accuracy, and conceptual structure (i.e. the discrimination of term meanings in a machine-referable manner, such as WordNet synsets), a lexical resource can play an important role in ontology engineering.

Using lexical resources (a) as sources of glosses and (b) as shared vocabulary spaces, could be seen as an attachment law for ontology engineering.

An important lexical resource that is organized by word meanings (i.e. concepts, or called synsets) is WordNet [14]. WordNet offers a machine-readable and comprehensive conceptual system for English words. Currently, a number of initiatives and efforts in the lexical semantic community have been started to extend WordNet to cover multiple languages; see the Global WordNet Association[9]. As we have discussed earlier, the consensus about domain concepts can be gained and realized by investigating these concepts at the level of a human language conceptualization. This can be practically accomplished by adopting the informal description of term meanings that can be found in lexical resources such as WordNet[10], as glosses. Notice that this enables a lexical resource to be a vocabulary space for the ontologies sharing its definitions.

In what follows we illustrate DogmaModeler's support of concept modeling. We focus only on the issues discussed in this paper. Other functionalities of DogmaModeler are noted at the end of this section.

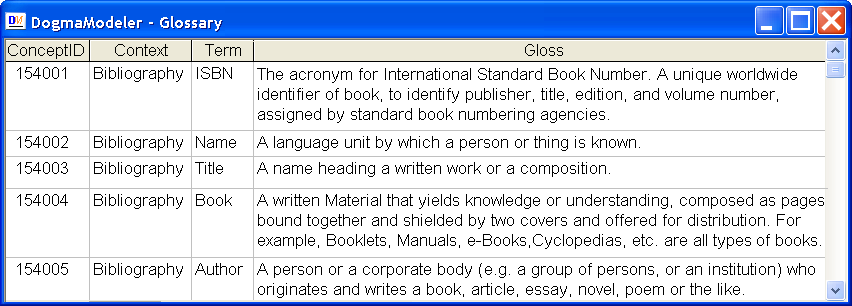

When introducing a new concept, ontology builders should define its gloss. Figure 2 shows the concept-modeling window in DogmaModeler, with an example of the term 'Book' and its gloss.

Figure 2. Concept modeling window.

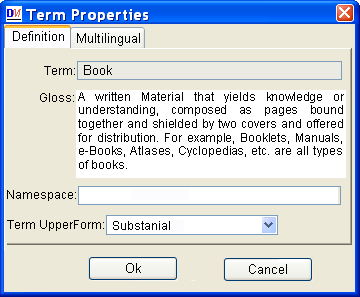

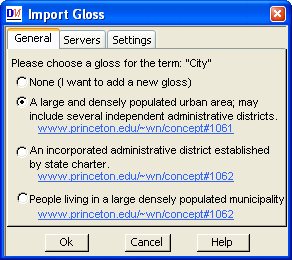

To enable the adoption and reusability of linguistic resources, Figure 3 shows a screenshot of a menu of glosses of the term 'City', which are retrieved from WordNet. The idea is that after introducing a new term (i.e. typing a term in the 'Term' field in Figure 1), the DogmaModeler automatically offers a menu of glosses for this term, as in Figure 3. Ontology builders can then, choose or define a new gloss. If an existing gloss has been chosen, a reference to this gloss is recorded in the "Namespace" field, see the links shown in Figure 3. In fact, the notion of Namespace here is a typical one, such as in RDF. In other words, when building a new ontology, in this way, the definitions of concepts found in linguistic resources are adopted and reused, through the URIs in the Namespace fields.

In practice, ontology builders only introduce a new gloss for a term, if this term is not found in the "supported" linguistic resources, or in case that the retrieved glosses are not accurate or suitable.

Recall that the notion of gloss is not intended to catalog general information or to provide morphological issues about a term, as conventional dictionaries usually do. As we have discussed in section 2, a gloss has a strict intention in our approach and not just any lexical resource can be adopted. The lexicon should providea clear discrimination of word/term meaning(s) in a machine-referable manner, much like the synsets in WordNet.

Figure 3: Incorporating existing lexical resources in gloss modeling.

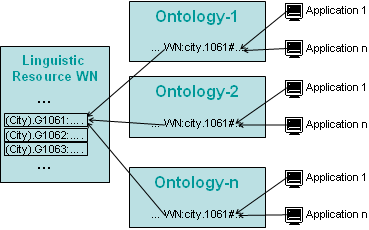

The main goals of incorporating linguistic resources in ontology engineering in this way is not only to save time in writing glosses, but mainly to communalize the word/term senses (i.e. domain concepts) that can be found in these resources, see Figure 4. In other words, we aim to reuse linguistic resources as shared vocabulary spaces in ontology engineering. In this way, semantic interoperability between different ontologies can also be enabled. For example, by using (euro)WordNet synsets [14] as a shared vocabulary space, different ontologies will be able to interoperate (or they can be easily integrated) at least freely from language ambiguity and multilingualism.

Remark: DogmaModeler is software tool for modeling and engineering ontologies [11]. It supports among other things: (1) the development, browsing, and management of domain and application axiomatizations, and axiomatization libraries; (2) the modeling of application axiomatizations using the ORM graphical notation, and the automatic generation of the corresponding ORM-ML; (3) the verbalization of application axiomatizations into pseudo natural language (supporting flexible verbalization templates for English, Dutch, Arabic, and Russian, for example) that allows non-experts to check, validate, or build axiomatizations; (4) the automatic composition of axiomatization modules, through a well-defined composition operator; (5) the validation of the syntax and semantics of application axiomatizations; (6) the incorporating of linguistic resources in ontology engineering; (7) a simple approach of multilingual lexicalization of ontologies; (8) the automatic mapping of ORM schemes into X-Forms and HTML-Forms; etc.

Figure 4. A simplified example of communalizing term senses (/concepts) found in linguistic resources.

In this paper we have introduced the notion of gloss for ontology engineering purposes. In addition, we have discussed the significance of glosses in the practice of ontology engineering. The incorporation of linguistic resources in ontology engineering has been discussed and illustrated.

We plan to implement a full adoption and adaptation of WordNet-alike lexicons into DogmaModeler. In addition, as gloss has a strict intention in our approach and so that not every lexical resource can be adopted, we plan to investigate how other kinds of lexicons and dictionaries such as the Cambridge dictionary can be ontologized and adopted. We aim to extract and re-engineer their meaning descriptions into machine-referable glosses, and so excluding the typical morphological and lexical issues.

We plan to investigate how much the process of writing or validating glosses can be (semi-)automated. For example, given the formal part of the ontology, a gloss can be parsed to know whether it starts with the principal/super type of the concept being defined. An other opportunity to is measure the lexical stability of each term among the ontology [3].

We are in debt to Robert Meersman, Michael Uschold, Fausto Giunchiglia, Andriy Lisovoy, and the anonymous reviewers for their comments, discussion, and suggestions on the earlier version of this work.

[1] Brejova, B., DiMarco, C., Vinar, T., Hidalgo, S. R., Holguin, G. and Patten, C.: Finding Patterns in Biological Sequences. Unpublished project report for CS798G, University of Waterloo, Fall 2000.

[2] Bench-Capon T.J.M., Malcolm, G.: Formalising Ontologies and Their Relations. Proceedings of DEXA'99. (1999) pp. 250-259

[3] Coen, G.: Database Lexicography. In the Journal of Data Knowledge Engineering. Volume 42, Number 3. 293-314 (2002).

[4]Guarino, N.: The Ontological Level. In R. Casati, B. Smith and G. White (eds.), Philosophy and the Cognitive Science. Hölder-Pichler-Tempsky, Vienna: 443-456. (1994)

[5]Gruber, T.: Toward principles for the design of ontologies used for knowledge sharing. International Journal of Human-Computer Studies, 43(5/6) (1995)

[6]Guarino, N.: Formal Ontology in Information Systems. Proceedings of FOIS'98, IOS Press, Amsterdam. (1998) pp. 3-15

[7]Guarino, N. and Giaretta, P.: Ontologies and Knowledge Bases: Towards a Terminological Clarification, in: Towards Very Large Knowledge Bases: Knowledge Building and Knowledge Sharing, N. Mars (ed.), pp 25-32, IOS Press, Amsterdam (1995).

[8]Gangemi, A., Guarino, N., Borgo, S.: Conceptual Analysis of Lexical Taxonomies: The Case of WordNet Top-Level. In the second International Conference on Formal Ontology in Information Systems, FOIS 2001, Ogunquit, Maine, USA.Proceedings. ACM, 2001

[9]Gangemi, A., Guarino, N., Oltramari, A., Borgo, S.: Cleaning-up WordNet's Top-Level. In: Proceedings of the 1st International WordNet Conference. (2002)

[10]Genesereth, M.R., Nilsson, N.J.: Logical Foundation of Artificial Intelligence. Morgan Kaufmann. Los Altos, California. (1987)

[11]Jarrar, M.: Towards Methodological Principles for Ontology Engineering. PhD thesis, Vrije Universiteit Brussel, 2005.

[12]Jarrar, M., Demey, J., Meersman, R. On Using Conceptual Data Modeling for Ontology Engineering. In: Aberer K., March S., and Spaccapietra S., (eds.): Journal on Data Semantics, Special issue on "Best papers from the ER/ODBASE/COOPIS 2002 Conferences", LNCS Vol. 2800, Springer. ISBN: 3-540-20407-5. October (2003) pp. 185-207

[13]Jarrar, M., Verlinden, R., Meersman, R.: Ontology-based Customer Complaint Management. In: Jarrar M., Salaun A., (eds.): Proceedings of the workshop on regulatory ontologies and the modeling of complaint regulations, Catania, Sicily, Italy. Springer Verlag LNCS. Vol. 2889. November (2003) pp. 594-606

[14]Miller, G. Beckwith, R., Fellbaum, F., Gross, D., Miller, K.: Introduction to wordnet: an on-line lexical database. International Journal of Lexicography, 3(4). (1990) pp. 235-244

[15]Qmair, Y.: Foundations of Arabic philosophy. Dar El-Machreq, Beirut. ISBN 2-7214-8024-3. (1991)

[16]Rigoutsos, I., Floratos, A., Ouzounis, C., Gao, Y. & Parida, L.: Dictionary building via unsupervised hierarchical motif discovery in the sequence space of natural proteins. Proteins: Struct. Funct. Genet. 37. (1999) pp. 264-277.

[17]Shapiro, S.: Propositional, First-Order And Higher-Order Logics: Basic Definitions, Rules of Inference, Examples. In: Iwanska, L., Stuart, S., Shapiro, (eds.): Natural Language Processing and Knowledge Representation: Language for Knowledge and Knowledge for Language. AAAI Press/The MIT Press, Menlo Park, CA. (1995)

[18]Temmerman, T.: Towards New Ways of Terminology Description, the sociocognitive approach. John Benjamins Publishing Company. Amsterdam. ISBN 9027223262. (2000)

[19]Uschold, M., King, M., Moralee, S., Zorgios, Y.: The Enterprise Ontology The Knowledge Engineering Review, In: Uschold, M., Tate, A. (eds.): Special Issue on Putting Ontologies to Use. Vol. 13. (1998)

[20]Bylander, T., Chandrasekaran, B.: Generic tasks in knowledge-based reasoning: The right level of abstraction for knowledge acquisition. In: Gaines B., Boose, J. (eds.): Knowledge Acquisition for Knowledge Based Systems. Vol. 1. Academic Press, London. (1988) pp. 65-77

[1] Hence, we sometimes use the term "domain axiomatization" and "ontology" to refer to the same thing.

[2] This is because of the large number of axioms and details that need to be intensively captured and investigated, such detailed axiomatizations are difficult -for both humans and machines- to compute and reason on, and they might hold "trivial" assumptions.

[3] This is an approximated translation from Arabic to English.

[4] For example, when integrating two ontologies (even they capture the same domain entities) one may find formal conflicts and disagreements between them, only because of the differences in their usability perspectives, different granularity, representation primitives and constructs (i.e. epistemology [4]), purpose, application, context, scope boundaries, etc.

[5] On the deference between "extensional" and "Intensional" semantics, we use the following simple description that we found in [17]: "The extensional semantics (value or denotation) of the expressions of a logic are relative to a particular interpretation, model, or situation. The extensional semantics of CarPool World, for example, are relative to a particular day. The denotation of a proposition is either True or False. If P is an expression of some logic, we will use [[P]] to mean the denotation of P. If we need to make explicit that we mean the denotation relative to situation S, we will use [[P]]S. The intensional semantics (or intension) of the expressions of a logic are independent of any specific interpretation, model, or situation, but are dependent only on the domain being conceptualized. If P is an expression of some logic, we will use [P] to mean the intension of P. If we need to make explicit that we mean the intension relative to domain D, we will use [P]D. Many formal people consider the intension of an expression to be a function from situations to denotations. For them, [P]D(S) = [[P]]S. However, less formally, the intensional semantics of a wfp can be given as a statement in a previously understood language (for example, English) that allows the extensional value to be determined in any specific situation.".

[6] There is more to say on how to define a gloss; we limited ourselves in this paper to present the most relevant issues.

[7] The reusability here is gained, in our opinion, because glosses are free from a certain formal knowledge structure, i.e. epistemology.

[8] Thus, we may view a lexicon of a language as an informal ontology for its community.

[9] http:/www.globalwordnet.org/ (visited, January 2005).