|

Providing satisfactory web performance in mobile networks is challenging due to the long latency and low bandwidth in these networks. In this paper, we develop ACME (Architecture for Content delivery in the Mobile Environment), which efficiently implements edge caching functionality in terminals by exploiting user interest correlation. By selectively pushing content to users most likely to request it in the future, ACME not only increases hit ratio, but also reduces terminal power consumption by 17 to 144 times in trace-driven simulations, compared to pushing content to all users. ACME also exhibits superior scalability, as the push group size grows only 62% when the number of users increases 16 times in different traces.

Content delivery, mobile web access, web browsing, edge caching, user interest correlation.

Satisfactory mobile web performance is essential to the success of emerging 2.5G and 3G mobile networks as mobile operators rely on the mobile web and other data applications to generate new revenues. However, ensuring adequate mobile web performance is uniquely challenging. In particular, link layer retransmissions, usually necessary in mobile networks to compensate for the error-prone air interface [1], lead to long and unpredictable latency. For example, it was shown that the PING round trip time from a terminal to a web server increased by more than 20 times when a wireline link was replaced by an emulated heavily loaded GPRS link [1]. Such long latencies are particularly detrimental to web interactivity and user experience, which typically deteriorates if the web page download time takes more than eight seconds. Increasingly rich web content and low bandwidth in mobile networks make this problem even worse.

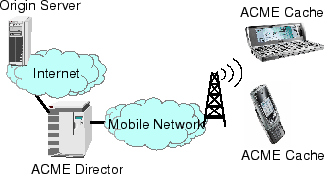

In this paper, we develop an architecture called ACME (Architecture for Content delivery in the Mobile Environment) that significantly enhances mobile web performance. This architecture, shown in Figure 1, is novel in two aspects. First, unlike traditional edge caching, which serves content from network edge, ACME implements edge caching functionality in the ACME cache at the terminal. This approach minimizes the use of the bottleneck (the radio link) and substantially reduces user perceived latency. Since edge caching is a superset of client caching and stores popular content requested by all users, it is necessary to push non-requested content to terminals. Specifically, implementing full-fledged edge caches in terminals requires pushing each requested web object to all terminals, as done in [3]. For mobile terminals, however, this approach would consume excessive terminal power and storage resources. Toward this end, we develop the concept of user interest correlation. User interest correlation refers to the common interest in the same content among different users, and it is the foundation of edge caching effectiveness. It has also been studied in the context of peer-to-peer content discovery [4]. The ACME Director shown in Figure 1 computes user interest correlation based on previous user access patterns, and selectively pushes the web object being requested by one user to other users who are most likely to request it in the future. We describe the ACME Director's algorithm and evaluate its performance using trace-driven simulations in Section 2.

We use conditional probability to measure user interest

correlation. For ![]() users, let

users, let ![]() be the

be the ![]() -by-

-by-![]() matrix such

that

matrix such

that

![]() , where

, where ![]() denotes the probability that

user

denotes the probability that

user ![]() will access a web object in the future, given user

will access a web object in the future, given user ![]() has accessed that object.

has accessed that object. ![]() is defined to be zero because

this case is handled by conventional client caching and is

irrelevant to ACME. In practice,

is defined to be zero because

this case is handled by conventional client caching and is

irrelevant to ACME. In practice, ![]() can be trained using past web

access data, as discussed in Section 2.2.

can be trained using past web

access data, as discussed in Section 2.2.

Once the ACME Director has a properly trained ![]() matrix, it

performs selective push as follows. First, we use a tunable

parameter called the ``selectiveness factor''

matrix, it

performs selective push as follows. First, we use a tunable

parameter called the ``selectiveness factor'' ![]() (

(

![]() ) to control push ``selectiveness''. Suppose user

) to control push ``selectiveness''. Suppose user ![]() requests a web object, and

requests a web object, and ![]() is among the largest

is among the largest ![]() elements in

elements in ![]() , then the ACME Director pushes the content to

user

, then the ACME Director pushes the content to

user ![]() . Obviously,

. Obviously, ![]() represents the case of

conventional client caching, and

represents the case of

conventional client caching, and ![]() represents the case of

full edge caching, i.e., every terminal receives a copy of the

requested content. The design goal of the ACME Director is to

achieve high hit ratio (close to that of full edge caching) with a

small

represents the case of

full edge caching, i.e., every terminal receives a copy of the

requested content. The design goal of the ACME Director is to

achieve high hit ratio (close to that of full edge caching) with a

small ![]() to reduce terminal power consumption.

to reduce terminal power consumption.

Due to the lack of publicly available mobile web traces, we use three wireline proxy traces [2] for simulations and exclude all dynamically generated and non-cacheable responses. The UCB trace is the first 300,000 accesses in UC Berkeley's home IP access, representing about 17 hours of activity from 2155 IP addresses. The BU trace is the concatenated access log of Room B19 in Boston University's computer science department in March and April 1995, with a total of 553 users and 437,861 web accesses. The NLANR trace is the access log of the pb node of the National Laboratory for Advanced Network Research on November 13, 2000, with a total number of 127 user IP addresses and 878,085 web accesses.

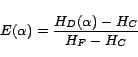

To quantitatively measure the ACME Director's performance, we note

that its hit ratio is upper-bounded by full edge caching and

lower-bounded by client caching. Its hit ratio is also dependent

on ![]() . So we define the Director Effectiveness,

. So we define the Director Effectiveness,

![]() , as

, as

We define the push group size ![]() as the average number of

terminals that receive a particular pushed web object for

selectiveness factor

as the average number of

terminals that receive a particular pushed web object for

selectiveness factor ![]() . Because average terminal power

consumption is proportional to

. Because average terminal power

consumption is proportional to ![]() , we use

, we use ![]() to

represent average terminal power consumption.

To compare the group size between different traces, we define

relative push group size,

to

represent average terminal power consumption.

To compare the group size between different traces, we define

relative push group size, ![]() , as

, as

Now we run simulations driven by the traces described above to

determine ![]() ,

, ![]() and

and ![]() . We partition

each trace into a training part (odd-numbered accesses) and a test

part (even-numbered accesses). We count the number of requests

that user

. We partition

each trace into a training part (odd-numbered accesses) and a test

part (even-numbered accesses). We count the number of requests

that user ![]() makes after user

makes after user ![]() in the training part and obtain

in the training part and obtain

![]() , for

, for ![]() , and normalize the matrix. We then

run the test part of each trace in a ACME Director simulator and

measure the hit ratios under different selectiveness factors.

, and normalize the matrix. We then

run the test part of each trace in a ACME Director simulator and

measure the hit ratios under different selectiveness factors.

![]() is then computed based on

is then computed based on ![]() ,

, ![]() and

and

![]() . We obtain

. We obtain ![]() by counting the number of terminals

receiving pushed object, for all requested objects, and compute

by counting the number of terminals

receiving pushed object, for all requested objects, and compute

![]() by normalization.

by normalization.

Figure 2 depicts Director Effectiveness ![]() against relative push group size

against relative push group size ![]() by varying

by varying ![]() from 0 to 1 for all three traces. ACME's effectiveness is evident

from the figure, as

from 0 to 1 for all three traces. ACME's effectiveness is evident

from the figure, as ![]() increases rapidly with very small

group size. In all three traces, a Director Effectiveness of 80%

is achieved at a relative push group size smaller than 20%.

Furthermore, ACME Director achieves 50% Effectiveness when the

relative push group size is as small as 0.7% (UCB trace) to 6%

(NLANR trace) of the full edge caching push group size. The push

group size ranges from 6.74 (NLANR trace) to 10.96 (UCB trace).

The reduction in average terminal power consumption is calculated

as the inverse of the relative push group size, and the ACME

Director reduces the terminal power consumption by 17 (NLANR

trace) to 144 times (UCB trace) while maintaining 50%

Effectiveness.

increases rapidly with very small

group size. In all three traces, a Director Effectiveness of 80%

is achieved at a relative push group size smaller than 20%.

Furthermore, ACME Director achieves 50% Effectiveness when the

relative push group size is as small as 0.7% (UCB trace) to 6%

(NLANR trace) of the full edge caching push group size. The push

group size ranges from 6.74 (NLANR trace) to 10.96 (UCB trace).

The reduction in average terminal power consumption is calculated

as the inverse of the relative push group size, and the ACME

Director reduces the terminal power consumption by 17 (NLANR

trace) to 144 times (UCB trace) while maintaining 50%

Effectiveness.

Another interesting finding from our simulations is that for a

wide range of Director Effectiveness values, the relative push

group size decreases with the increasing number of users among

traces. This indicates that the ACME push group size is much less

sensitive than the full edge caching push group size, to the

vastly different number of users in the three traces. Indeed, at

50% Effectiveness, the push group size increases only 62%, from

6.74 to 10.96, as ![]() increases 16 times from 127 in the NLANR

trace to 2155 in the UCB trace. We conjecture that this is because

for a given user, her interest in content can be best represented

by a small group of users whose interests are closest to hers. The

size of this interest group grows much slower than the general

user group because it only keeps the users with the closest

interest in the general user group, and identifying this group can

help to create scalable and efficient content multicast systems.

increases 16 times from 127 in the NLANR

trace to 2155 in the UCB trace. We conjecture that this is because

for a given user, her interest in content can be best represented

by a small group of users whose interests are closest to hers. The

size of this interest group grows much slower than the general

user group because it only keeps the users with the closest

interest in the general user group, and identifying this group can

help to create scalable and efficient content multicast systems.

ACME is a versatile architecture and can be implemented in a variety of networks. It can utilize multicast (such as MBMS in 3GPP) for efficient delivery, if such capability exists. In this case, ACME could use transport protocols similar to those studied in [3]. In other networks, ACME can utilize existing push mechanisms to implement edge caching. The split proxy architecture of ACME makes it possible to implement optimal transport protocols between the ACME Director and ACME caches, and provides full transparency to web applications at the origin server and the clients.

The authors would like to thank Gang Xu, Dimitris Koulakiotis, Duane Wessels and Antti Toskala for valuable information and comments.