To evaluate the efficiency of the proposed schemes, we developed a model similar to the one in [1]. We assume that an MT can not only connect to the Internet but also forward messages to other MTs over a wireless LAN (e.g., IEEE 802.11). We performed extensive simulations to analyze various performance metrics. Owing to space limitations, only a subset of the results is included here; for additional results, please refer to [2].

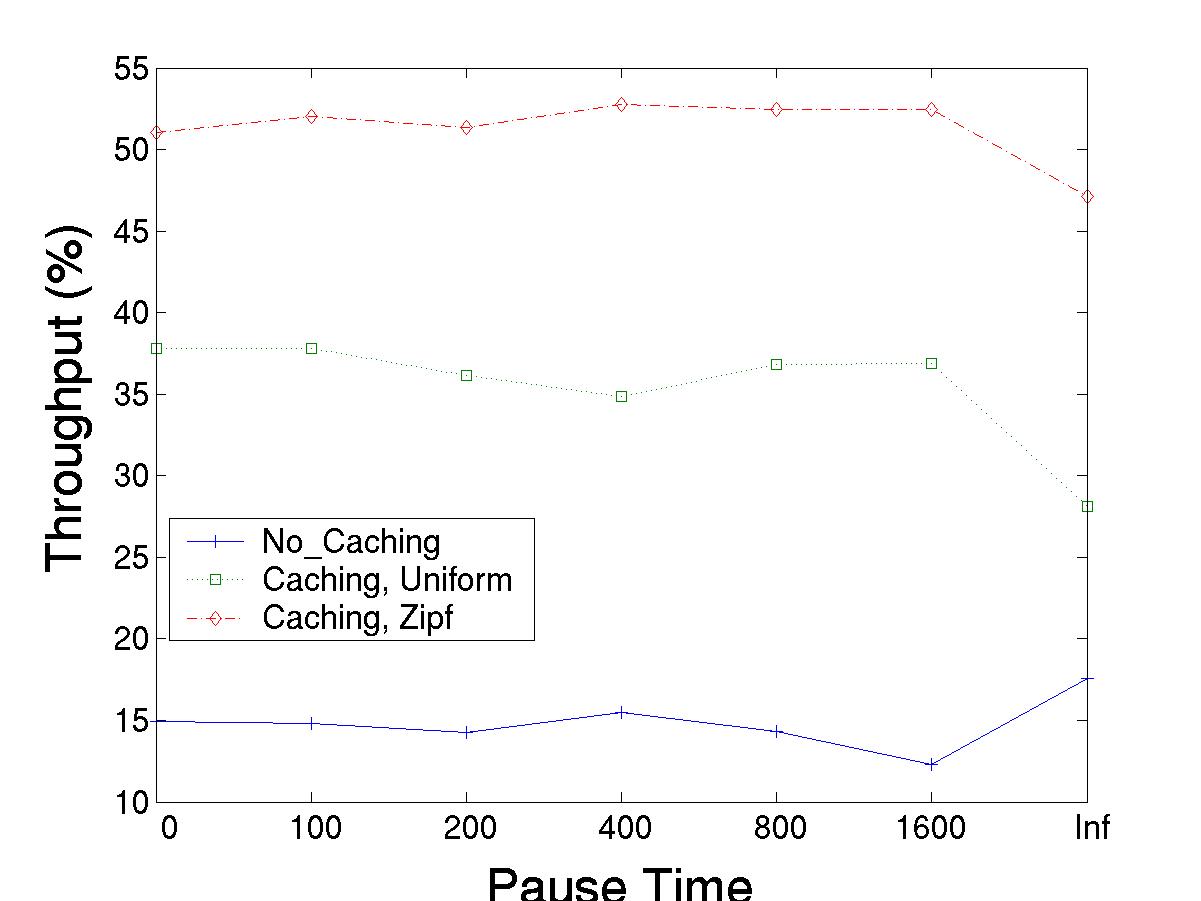

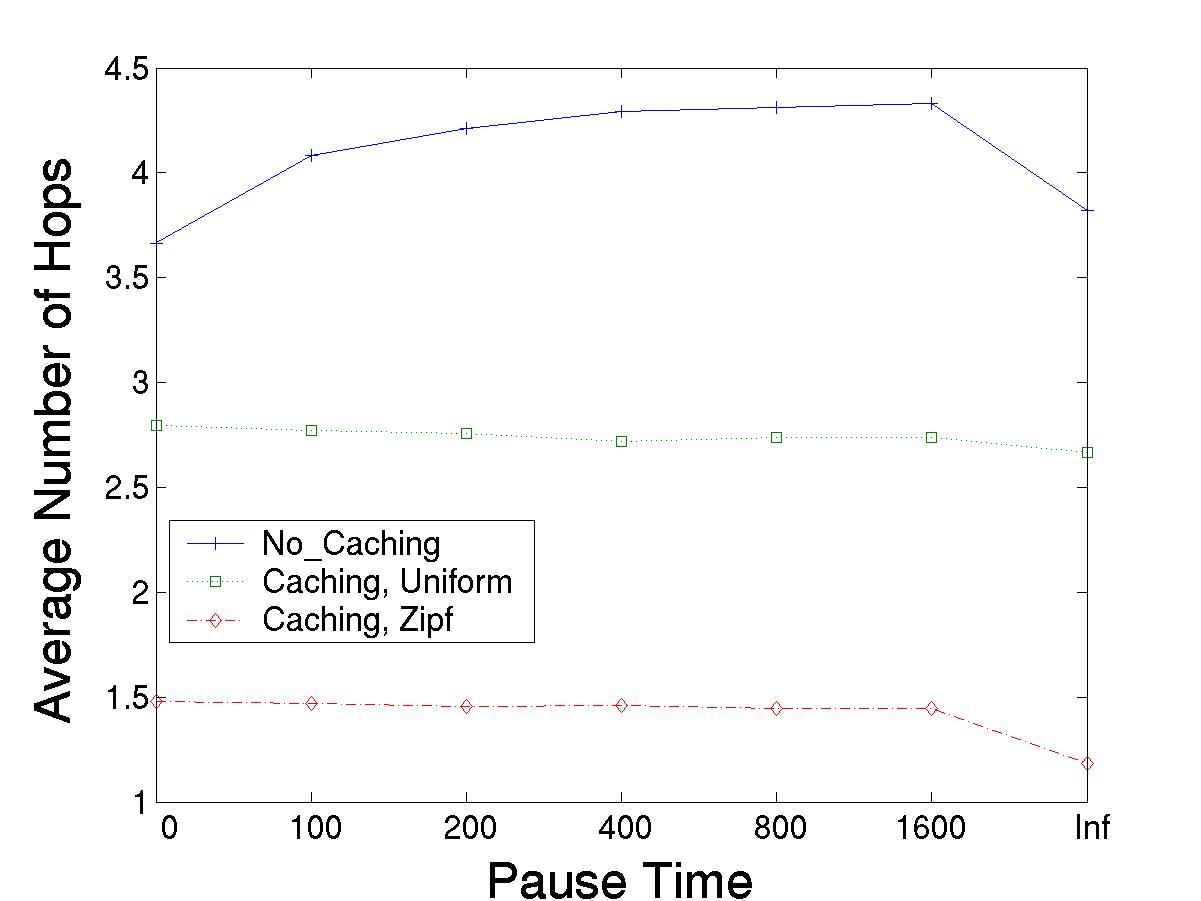

Figure 1(a) shows that data accessibility improves greatly when the aggregate cache is used: throughput more than doubles compared to the no-cache case. With caching, there is a high probability that a requested data item is held in the MT's local cache or in the cache of another MT. Even when a set of MTs is isolated from the AP, they can still try to access the data items cached among themselves, unlike in the no-cache case. Note that an improvement of almost 200% over the no-cache case is achieved when the data access pattern follows a Zipf distribution. Figure 1(b) shows the effect of the aggregate cache on the average latency. Because a request can be satisfied by any of the MTs located along the path on which the request is relayed to the AP, a data item can be accessed much faster than in the no-cache case. As expected, caching reduces latency by more than 50%. These results demonstrate the effectiveness of the aggregate caching schemes.
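The reasoning behind both the latency and the accessibility gains can be illustrated with a minimal sketch. It is not the simulator used for the results above; the function name `resolve_request`, the representation of each MT's cache as a Python set, and the use of hop count as a stand-in for latency are all assumptions made purely for illustration.

```python
from typing import Optional, Sequence, Set, Tuple


def resolve_request(
    path_caches: Sequence[Set[str]],
    item: str,
    ap_reachable: bool = True,
) -> Tuple[str, Optional[int]]:
    """Relay a request hop by hop toward the AP (hypothetical helper).

    path_caches[0] is the requesting MT's local cache; the remaining
    entries are the caches of the MTs along the relay path, in order.
    Returns (source, hops), where hops is the number of relays needed
    before the request is satisfied, or None if it cannot be satisfied.
    """
    for hops, cache in enumerate(path_caches):
        if item in cache:
            # Hit in the local cache (0 hops) or at an intermediate MT.
            return ("local" if hops == 0 else "remote", hops)
    if ap_reachable:
        # No MT on the path holds the item: go all the way to the AP.
        return ("ap", len(path_caches))
    # Isolated from the AP and no cached copy on the path.
    return ("fail", None)


# Three MTs on the path: the requester and two relays.
path = [{"a"}, {"b"}, {"c"}]
```

With this sketch, `resolve_request(path, "b")` is satisfied after one hop instead of the three hops needed to reach the AP, and `resolve_request(path, "b", ap_reachable=False)` still succeeds even though the MTs are isolated from the AP, whereas a request for an uncached item in that situation fails.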