Presenting interactive timed media onto distributed networked devices challenges current media technologies. This paper presents an architecture in which the interactive presentation is scripted in StoryML, an XML-based language, and presented to multiple interface devices organized in a hierarchy.

Keywords: distributed media, distributed interfaces, architectures.

New technologies such as MPEG-4 and SMIL enable the delivery of interactive multimedia content to consumers' homes. Using physical interface devices to create a more natural environment, in which real-life stimuli address all the human senses, gives people a stronger feeling of engagement [1]. The work presented here focuses on how to structure the system and the content to support distributed interfaces for timed media applications. The carrier for this work is an interactive storytelling application (TOONS) for children aged 8-12, developed in the NexTV project sponsored by the European Commission under the IST programme.

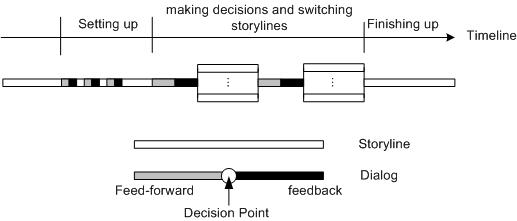

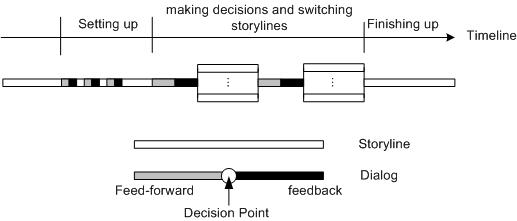

Figure 1 shows the conceptual model of an interactive story. This model consists of storylines and dialogs. The storylines are the non-interactive parts of the video stream. The dialogs are the parts in which the users make decisions that switch between storylines. The application plays media objects on, and collects user responses from, several networked devices in the user's environment.

Figure 1. Conceptual model of TOONS
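To make the branching structure concrete, the following minimal sketch represents storylines as nodes and dialogs as maps from a user response to the next storyline. It is written in Python for brevity rather than in the Java used for the implementation. The class names, the `choices` map and the `default` fallback used when no valid response arrives are illustrative assumptions, not part of TOONS.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Dialog:
    choices: Dict[str, str]  # user response -> name of the next storyline
    default: str             # assumed fallback when no valid response is given


@dataclass
class Storyline:
    name: str
    dialog: Optional[Dialog] = None  # None marks an ending storyline


def play(story: Dict[str, Storyline], start: str, responses: List[str]) -> List[str]:
    """Return the names of the storylines played for a sequence of user responses."""
    played: List[str] = []
    current = story[start]
    answers = iter(responses)
    while True:
        played.append(current.name)
        if current.dialog is None:
            return played
        answer = next(answers, None)  # None when the user gives no more responses
        current = story[current.dialog.choices.get(answer, current.dialog.default)]
```

For example, a corridor storyline whose dialog offers a "left" and a "right" door leads to `["corridor", "dark_room"]` when the user answers "left".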

Given these requirements, the system architecture should emphasize and support the following: (1) Distributed interfaces require that not only the content presentation but also the user input and control be distributed over the networked environment. (2) The story will be presented in different user environments whose configurations may change over time. (3) Not only the media but also the user interactions are timed, and both must be synchronized.

We developed a framework for presenting interactive stories on distributed devices. The TOONS application was built on this framework and presents the story on a television screen, a toy robot, ambient lights and surrounding audio devices.

The TOONS application requires a document technology that can distribute both the interaction and the media objects over multiple presentation devices. MPEG-4 [2] documents based on the Binary Format for Scenes (BIFS) emphasize the composition of media objects on a single rendering device, not on several. SMIL 2.0 [3] introduces the MultiWindowLayout module, which contains elements and attributes for creating and controlling multiple top-level windows. This is very promising and comes closer to the requirements of distributed presentation and interaction. However, these top-level windows are intended to reside on the same rendering device. At most they can be regarded as interface components that all share the same capabilities, not as components with different capabilities.

We developed StoryML to script the interactive story [4]. Because the configuration of the target environment is not known in advance, the presentation must be described at a high level of abstraction.

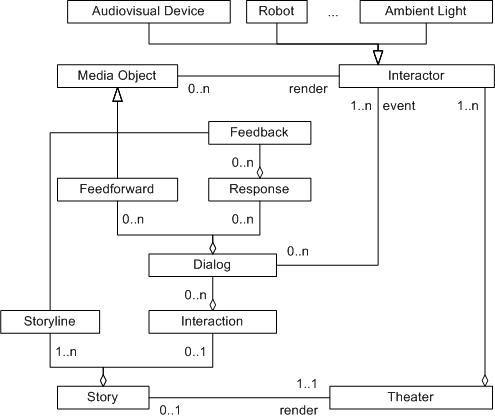

We model the interactive media presentation as an interactive Story presented in a desired environment (called a Theater). The story consists of several Storylines and a definition of the possible user Interaction during the story. User interaction can result in switching between storylines or in changes within a storyline. The interaction is made up of Dialogues. A dialogue is a linear conversation between the system and the user, which in turn consists of Feed-forward objects and of Feedback objects that depend on the user's Response. The environment may contain several Interactors, which render the media objects. Finally, the story as a whole is rendered in a Theater. Figure 2 shows the object-oriented model of StoryML. A code sample is given in the appendix.

Figure 2. Object oriented model of a presentation
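The object model can be illustrated by parsing a small StoryML-like fragment in two stages: first from XML into DOM objects, then from DOM objects into classes that mirror Story, Theater, Interactor, Dialogue and Response. The sketch is in Python. The appendix sample is not reproduced here, so every element and attribute name in `SAMPLE` (`storyline`, `dialogue`, `feedforward`, `response`, `target`, and so on) is a hypothetical assumption, and Feedback objects are omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import Dict, List
from xml.dom import minidom

# Hypothetical StoryML fragment; every element and attribute name is assumed.
SAMPLE = """
<story id="toons">
  <theater>
    <interactor id="screen" type="audiovisual"/>
    <interactor id="tony" type="robotic"/>
  </theater>
  <storyline id="corridor" src="corridor.mp4"/>
  <dialogue id="doors">
    <feedforward interactor="screen" src="choose_door.mp4"/>
    <response interactor="tony" value="left" target="dark_room"/>
    <response interactor="tony" value="right" target="garden"/>
  </dialogue>
</story>
"""


@dataclass
class Interactor:
    id: str
    type: str  # abstract capability requirement


@dataclass
class Response:
    interactor: str
    value: str
    target: str  # storyline selected by this response


@dataclass
class Dialogue:
    id: str
    feedforward: List[str] = field(default_factory=list)
    responses: List[Response] = field(default_factory=list)


@dataclass
class Story:
    id: str
    theater: List[Interactor] = field(default_factory=list)
    storylines: Dict[str, str] = field(default_factory=dict)  # id -> media source
    dialogues: List[Dialogue] = field(default_factory=list)


def parse_storyml(text: str) -> Story:
    root = minidom.parseString(text.strip()).documentElement  # stage 1: XML -> DOM
    story = Story(root.getAttribute("id"))                      # stage 2: DOM -> model
    for el in root.getElementsByTagName("interactor"):
        story.theater.append(Interactor(el.getAttribute("id"), el.getAttribute("type")))
    for el in root.getElementsByTagName("storyline"):
        story.storylines[el.getAttribute("id")] = el.getAttribute("src")
    for el in root.getElementsByTagName("dialogue"):
        dialogue = Dialogue(el.getAttribute("id"))
        for ff in el.getElementsByTagName("feedforward"):
            dialogue.feedforward.append(ff.getAttribute("src"))
        for r in el.getElementsByTagName("response"):
            dialogue.responses.append(Response(
                r.getAttribute("interactor"), r.getAttribute("value"),
                r.getAttribute("target")))
        story.dialogues.append(dialogue)
    return story
```

Note that the Theater only names abstract interactor types. Nothing in the document refers to a concrete device.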

An Interactor is a self-contained autonomous agent with expertise in data processing and user interaction. It can abstract user inputs as events and communicate with other interactors. An Interactor element in StoryML has a "type" attribute, which defines the basic requirements on the Interactor's capabilities at an abstract level.
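The following Python sketch shows one way an interactor could abstract device-specific input into events and share them with cooperating interactors. The class names, the event dictionary layout and the sensor identifiers in `SENSOR_TO_CHOICE` are hypothetical.

```python
from typing import Dict, List


class Interactor:
    """Sketch of an autonomous interactor; all names are hypothetical."""

    def __init__(self, name: str, type_: str):
        self.name = name
        self.type = type_  # mirrors the abstract "type" attribute in StoryML
        self.peers: List["Interactor"] = []
        self.inbox: List[Dict[str, str]] = []

    def connect(self, other: "Interactor") -> None:
        self.peers.append(other)
        other.peers.append(self)

    def abstract_input(self, raw: str) -> Dict[str, str]:
        """Translate a device-specific input into an abstract event."""
        raise NotImplementedError

    def on_raw_input(self, raw: str) -> None:
        event = self.abstract_input(raw)
        for peer in self.peers:  # share the abstract event with cooperating interactors
            peer.inbox.append(event)


class RoboticInteractor(Interactor):
    # Assumed mapping from touch-sensor identifiers to abstract choices.
    SENSOR_TO_CHOICE = {"touch_1": "left", "touch_3": "right"}

    def abstract_input(self, raw: str) -> Dict[str, str]:
        return {"source": self.name, "event": "response",
                "value": self.SENSOR_TO_CHOICE.get(raw, "unknown")}


class AudiovisualInteractor(Interactor):
    def abstract_input(self, raw: str) -> Dict[str, str]:
        # e.g. a remote-control key that is already named after the choice
        return {"source": self.name, "event": "response", "value": raw}
```

Because both subclasses emit the same abstract "response" event, the rest of the system does not need to know whether a choice came from pushing the robot or from a remote control.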

A Theater is defined as a collection of several such cooperating Interactors. The Theater assigns tasks to each Interactor according to the script, for example rendering media objects or reporting user responses during different periods of time.
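Task assignment over time can be sketched as a list of time-bounded tasks that the Theater filters per interactor. The Python `Task` fields, the action names and the half-open `[start, end)` interval convention are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Task:
    interactor: str  # interactor id from the Theater definition
    action: str      # e.g. "render" or "report_response" (hypothetical names)
    target: str      # media object or dialogue id
    start: float     # seconds on the story timeline
    end: float       # exclusive end of the task's period


class Theater:
    def __init__(self, tasks: List[Task]):
        self.tasks = tasks

    def active_tasks(self, interactor: str, t: float) -> List[Task]:
        """Return the tasks the given interactor must perform at story time t."""
        return [task for task in self.tasks
                if task.interactor == interactor and task.start <= t < task.end]
```

In this sketch, the robot would be asked to report responses only while the door dialogue is open.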

As mentioned, the Theater is the desired environment in which the Story will play. Since the actual target environments may differ, there is a mapping problem: how to satisfy the abstract requirements of the Theater with the devices present in the users' physical environments.
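One simple way to frame the mapping problem is capability matching. Each abstract interactor type requires a set of capabilities, and a physical device qualifies if its advertised capabilities include that set. The capability vocabulary in `REQUIRED` and the greedy first-fit strategy are assumptions for illustration, not the paper's algorithm.

```python
from typing import Dict, Optional, Set

# Hypothetical capability vocabulary for each abstract interactor type.
REQUIRED: Dict[str, Set[str]] = {
    "audiovisual": {"video", "audio"},
    "robotic": {"motion", "touch_input"},
    "ambient_light": {"dimmable_light"},
}


def map_theater(theater: Dict[str, str],
                devices: Dict[str, Set[str]]) -> Dict[str, Optional[str]]:
    """Map each interactor id (with its abstract type) to a suitable device.

    Greedy first fit: each device is used at most once, and interactors that
    cannot be satisfied map to None.
    """
    mapping: Dict[str, Optional[str]] = {}
    free = dict(devices)
    for interactor, type_ in theater.items():
        needed = REQUIRED[type_]
        match = next((d for d, caps in free.items() if needed <= caps), None)
        mapping[interactor] = match
        if match is not None:
            del free[match]
    return mapping
```

Greedy matching can miss a valid assignment that a full bipartite matching would find. In this architecture, an unmatched interactor would instead be handled by its virtual interactor at run time.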

Storylines and the feed-forward and feedback components of a Story can all be regarded as time-based media objects. A media object can be rather abstract: expressions, behaviors and even emotions can be defined as media objects, as long as some Interactor can recognize and render them.
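To illustrate abstract media objects, the Python sketch below gives an "emotion" object a kind, a value and a time interval, and lets each interactor decide whether it recognizes that kind. The kind names, the rendering strings and the choice to dispatch to every capable interactor, rather than just one, are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class MediaObject:
    kind: str        # e.g. "video", "behavior", "emotion"
    value: str       # e.g. "scared"
    start: float     # seconds on the story timeline
    duration: float


class Renderer:
    """Hypothetical interactor that renders only the media kinds it recognizes."""

    def __init__(self, name: str, recognizes: Dict[str, str]):
        self.name = name
        self.recognizes = recognizes  # media kind -> device-specific rendering

    def can_render(self, obj: MediaObject) -> bool:
        return obj.kind in self.recognizes

    def render(self, obj: MediaObject) -> str:
        return f"{self.name}: {self.recognizes[obj.kind]} '{obj.value}'"


def dispatch(obj: MediaObject, interactors: List[Renderer]) -> List[str]:
    """Hand an abstract media object to every interactor that can render it."""
    return [i.render(obj) for i in interactors if i.can_render(obj)]
```

In this sketch, the same "scared" emotion becomes a robot behavior on one device and a caption on another, while a light that does not recognize emotions ignores it.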

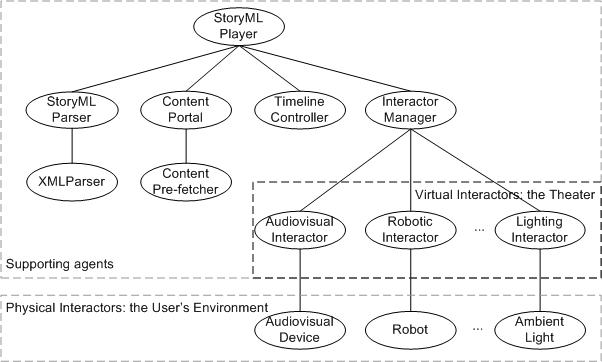

Figure 3 shows the hierarchical structure of the StoryML player. The content portal establishes the connection to content servers and provides the system with timed content. The content pre-fetcher compensates for start-up latency by pre-fetching a certain amount of data, ensuring that media objects are ready to start at their specified moments. An XML parser first parses the StoryML document into Document Object Model (DOM) objects, and the StoryML parser then translates the DOM objects into internal semantic representations. The Timeline controller acts as the timing engine and plays a central role in synchronizing media and interaction.
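The cooperation between the pre-fetcher and the Timeline controller can be sketched as a priority queue of timed commands. Each scheduled object, whether a storyline or a dialogue's interaction window, is pre-fetched a fixed lead time before it starts, then started and stopped on time. The schedule format, the constant `prefetch_lead` and the tie-breaking order are assumptions. The sketch only produces the command sequence and does not play any media.

```python
import heapq
from typing import List, Tuple

# Hypothetical schedule entries: (start time in s, duration in s, object id)
Schedule = List[Tuple[float, float, str]]


def build_timeline(schedule: Schedule, prefetch_lead: float) -> List[Tuple[float, str, str]]:
    """Expand a schedule into time-ordered (time, action, object) commands.

    Each object is pre-fetched `prefetch_lead` seconds before its start
    (never before t = 0), started on time, and stopped when its duration ends.
    """
    order = {"prefetch": 0, "stop": 1, "start": 2}  # tie-break at equal times
    heap: List[Tuple[float, int, str, str]] = []
    for start, duration, obj in schedule:
        for t, action in ((max(0.0, start - prefetch_lead), "prefetch"),
                          (start, "start"),
                          (start + duration, "stop")):
            heapq.heappush(heap, (t, order[action], action, obj))
    commands = []
    while heap:
        t, _, action, obj = heapq.heappop(heap)
        commands.append((t, action, obj))
    return commands
```

A heap rather than a one-off sort leaves room for a running controller to insert new commands, for example when a user response switches storylines.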

The bottom-level agents represent the different physical interactors. Each physical agent has an intermediate virtual interactor connected to it as its software counterpart. All virtual interactors are managed by an Interactor manager.

In the hierarchy shown in Figure 3, each virtual interactor is responsible for finding out whether the user's environment contains a physical device that can serve as its counterpart. It does this by broadcasting a query to the network, and every networked device replies with a token together with a description of its capabilities. If a suitable device is found, the media presentation and interaction tasks are transferred to the device identified by that token.
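The discovery step can be sketched with an in-memory stand-in for the network. A broadcast query returns every device's token and capability description, and the virtual interactor binds to the first device whose capabilities cover its requirements. `Network`, `broadcast_query`, `send` and the use of random UUID tokens are hypothetical. A real system would use a network discovery protocol, and this sketch does not prevent two virtual interactors from binding to the same device.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class PhysicalDevice:
    capabilities: Set[str]
    token: str = field(default_factory=lambda: uuid.uuid4().hex)
    received: List[str] = field(default_factory=list)  # tasks delivered to the device


class Network:
    """In-memory stand-in for a broadcast-capable network (hypothetical)."""

    def __init__(self, devices: List[PhysicalDevice]):
        self.devices = {d.token: d for d in devices}

    def broadcast_query(self) -> List[Tuple[str, Set[str]]]:
        # Every networked device answers with its token and capability description.
        return [(token, d.capabilities) for token, d in self.devices.items()]

    def send(self, token: str, task: str) -> None:
        self.devices[token].received.append(task)


class VirtualInteractor:
    def __init__(self, name: str, required: Set[str], network: Network):
        self.name, self.required, self.network = name, required, network
        self.bound: Optional[str] = None  # token of the physical counterpart

    def discover(self) -> bool:
        for token, caps in self.network.broadcast_query():
            if self.required <= caps:
                self.bound = token
                return True
        return False

    def assign(self, task: str) -> None:
        if self.bound is None and not self.discover():
            raise RuntimeError(f"no device available for {self.name}")
        self.network.send(self.bound, task)  # transfer the task via its token


class InteractorManager:
    def __init__(self, interactors: List[VirtualInteractor]):
        self.interactors: Dict[str, VirtualInteractor] = {v.name: v for v in interactors}

    def dispatch(self, name: str, task: str) -> None:
        self.interactors[name].assign(task)
```

The manager only addresses virtual interactors by name. Which physical device receives the task is decided inside the virtual interactor.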

The virtual interactors maintain the connection with the physical ones by exchanging handshake messages at regular intervals. When the physical device stops responding, the virtual interactor tries to find another suitable device in the environment. If it fails to find any corresponding physical device, the virtual interactor presents itself on an audiovisual device so that the media presentation and the user interaction become possible again.
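The keep-alive and fallback behavior can be sketched as a `heartbeat` method that is called periodically. If the bound device answers the handshake, nothing changes. Otherwise the virtual interactor looks for another responsive device with the required capabilities and, failing that, falls back to an audiovisual device. The `alive` flag stands in for real network replies. Retrying discovery while in fallback mode is an added assumption, not something the text specifies.

```python
from typing import List, Optional, Set


class Device:
    def __init__(self, token: str, capabilities: Set[str]):
        self.token, self.capabilities, self.alive = token, capabilities, True

    def handshake(self) -> bool:
        return self.alive  # a real device would answer over the network


class VirtualInteractor:
    """Hypothetical sketch of the keep-alive and fallback behavior."""

    def __init__(self, required: Set[str], devices: List[Device], fallback: Device):
        self.required, self.devices, self.fallback = required, devices, fallback
        self.bound: Optional[Device] = None
        self.emulated = False  # True while presenting itself on the audiovisual device

    def find_device(self) -> Optional[Device]:
        for d in self.devices:
            if d.handshake() and self.required <= d.capabilities:
                return d
        return None

    def heartbeat(self) -> str:
        """Call periodically; returns the token of the device now in use."""
        if self.bound is not None and not self.emulated and self.bound.handshake():
            return self.bound.token
        device = self.find_device()  # re-discover (assumed: also retried while emulating)
        if device is not None:
            self.bound, self.emulated = device, False
        else:
            self.bound, self.emulated = self.fallback, True
        return self.bound.token
```

For example, when the first robot stops answering, the next heartbeat moves the tasks to a second robot. When no robot is left, the interactor is emulated on the PC.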

Therefore, the mapping from the desired Theater to the user's physical environment is performed autonomously and dynamically by the virtual interactors, without intervention from the upper-layer manager.

For demonstration purposes, the TOONS application is implemented on a PC, a robot and a light, which serve respectively as an audiovisual interactor, a robotic interactor and an ambient light interactor. All these components are implemented in Java. The PC provides the services of the content portal, StoryML parser, timeline controller and interactor manager. The robot, named Tony, is assembled using the LEGO Mindstorms Robotics Invention System (RIS). Tony is powered by leJOS, an embedded Java VM. The light is controlled via a Java virtual interactor running on the PC.

All of these interactors take part in the TOONS show. Tony is "woken up" at a certain moment during the show and is then able to react to some of the events happening in it. During certain periods of time, the user is invited to help the main character make decisions (for example, to open the "left" or the "right" door) by pushing Tony to go left or right (see the sample code in the appendix). When the user decides that the character should enter the dark room, the light goes off and Tony behaves as if scared. The user can then switch on the light to illuminate both the dark room in reality and the dark room in the virtual world.
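The timed decision in this scenario can be sketched in Python as follows. Pushes on Tony arrive as timestamped abstract events, only pushes inside the dialogue's response window count, and the chosen storyline determines which reactions the other interactors perform. The event format, the "first valid push wins" rule, the default storyline and the contents of `REACTIONS` are hypothetical, not taken from the TOONS script.

```python
from typing import Dict, List, Tuple

# Hypothetical abstract events reported by the robotic interactor: (time in s, value)
Event = Tuple[float, str]


def resolve_dialogue(events: List[Event], window: Tuple[float, float],
                     choices: Dict[str, str], default: str) -> str:
    """Return the next storyline: the first valid push inside the window wins."""
    opens, closes = window
    for t, value in sorted(events):
        if opens <= t < closes and value in choices:
            return choices[value]
    return default  # assumed behavior when the user does not respond in time


# Hypothetical reactions the script could attach to each chosen storyline.
REACTIONS: Dict[str, List[Tuple[str, str]]] = {
    "dark_room": [("ambient_light", "off"), ("robotic", "behave_scared")],
    "garden": [("ambient_light", "on")],
}
```

A push at 12 s, before the dialogue opens at 30 s, is ignored. A "left" push at 31.5 s selects the dark room, which switches the light off and makes Tony behave as if scared.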

We presented a framework that allows multimedia content to be presented in different environments. StoryML plays an important role in the framework: (1) StoryML separates the content from the concrete physical devices. (2) A StoryML document specifies abstract media objects at a high level and leaves the complexity to the implementation of the rendering interactors. (3) The StoryML player supports automatic mapping of the same document onto different environments with dynamic configurations.

The author is grateful to those who read and commented on the early drafts, and in particular to Maddy Janse and Young Peng.