This case study compares students’ learning experience and outcomes in the lecture and online versions of a first-year introductory computing course offered at the Department of Computer Science of the Hong Kong University of Science and Technology. In spring 2000, 105 mostly first-year biology majors completed the lecture-based course. In fall 2000, the online version of the course was offered to 180 mostly first-year chemistry majors. The study found no marked differences in overall student learning and satisfaction across the two course versions. However, students of the online course obtained lower results in conceptual learning and perceived the course as less difficult and slower-paced than the students of the lecture-based course did. These differences are interpreted in the context of the Hong Kong educational system.

While online learning has been found successful and effective in the West (e.g., [1, 2, 3]), little is known about its suitability in the Chinese context. We report experiences from one of the first experiments on online learning in Hong Kong. We compare college students’ learning experience and outcomes in the lecture and online versions of a first-year “COMP101 Computing Fundamentals” course and seek answers to three questions.

First, do Chinese students achieve similar learning outcomes in the online and lecture-based courses, as was previously found in the West?

Second, should students be assigned to the different courses based on their computing background?

Third, do students react similarly to the two versions of the course, as was previously found in the West?

“COMP101 Computing Fundamentals” is a first-year course for non-engineering students, offered at the Hong Kong University of Science and Technology. The course objectives are to help students deepen their understanding of computers and computing and to improve their practical computing skills.

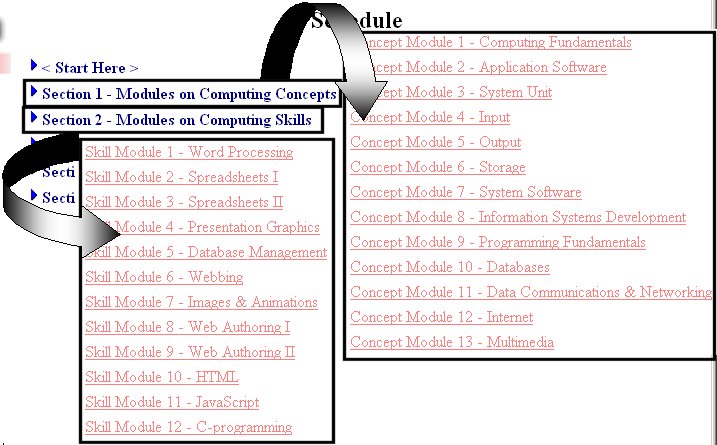

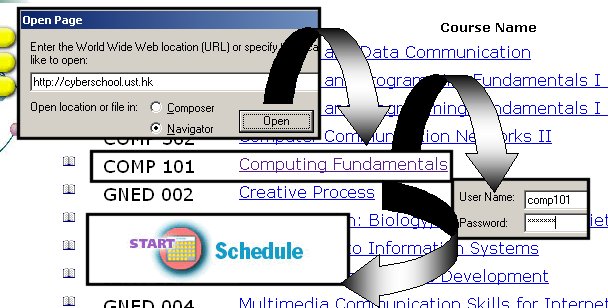

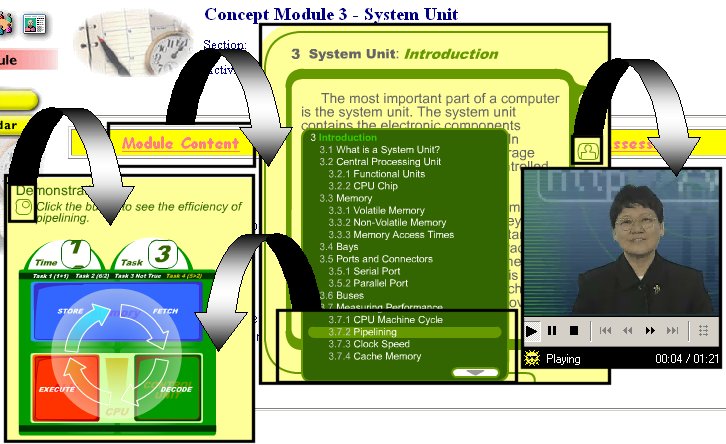

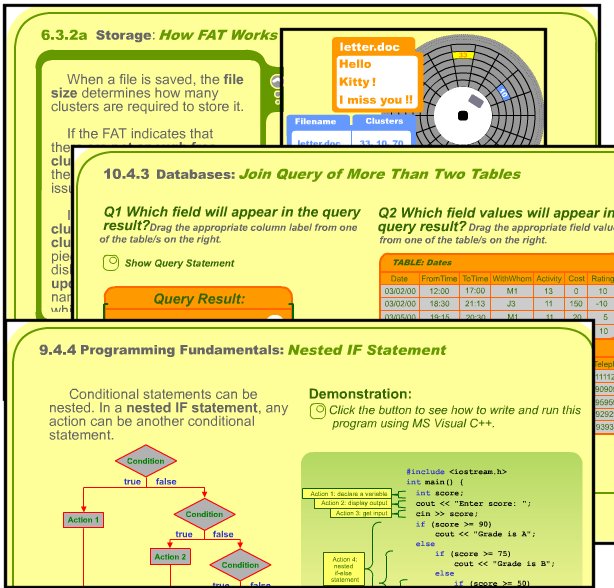

In spring 2000, the course was offered in a lecture-based format where the Web was used as a support structure (http://www.cs.ust.hk/~moneta/comp101/). For the fall 2000 online version, which had no traditional lectures, the Web presentation of the lecture materials was transformed to include multimedia elements (audio, video, narrated and non-narrated animations, and procedural demonstrations) and interactive features such as online self-assessment questions and exercises.

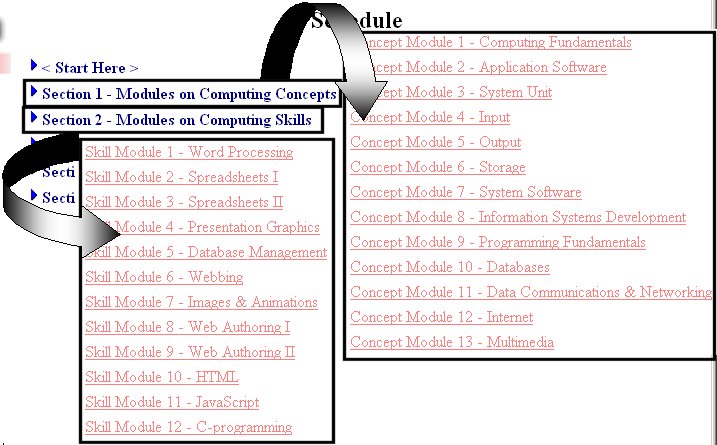

To access the online course, open the URL http://cyberschool.ust.hk and choose “COMP101 Computing Fundamentals”. When requested, enter “comp101” as both username and password. The course materials are accessible through the “START Schedule” button.

In both courses, the weekly small-group laboratory sessions were organized in the same format, with a teaching assistant directing the learning activities. Furthermore, the same instructor (the first author) was in charge of both courses.

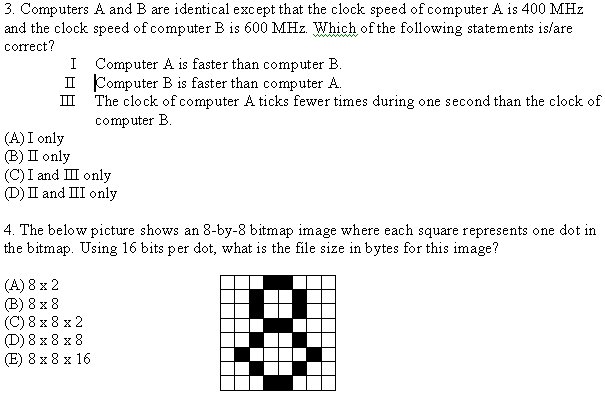

The midterm examinations of the two courses had 21 multiple-choice questions in common. Seven questions tested students’ learning of factual knowledge. The remaining 14 questions tested students’ conceptual learning; three of these were further classified as testing the ability to apply learned concepts.

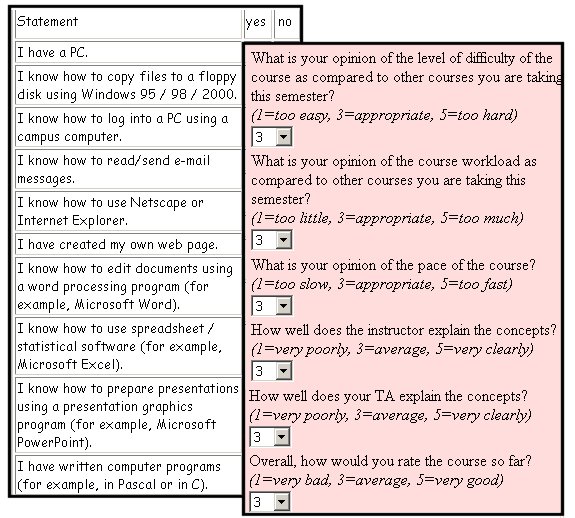

Students’ computing background was established with a 10-item checklist (e.g., “I have created my own web page.”) that the students completed at the beginning of the course.

During the fifth and sixth weeks of the semester, the students were asked to complete an anonymous online midterm course assessment form containing six scaled items.

The first research question was addressed using regression analysis. Table 1 reports the estimates of the four regression models of test scores on course version and computing background. While there are no significant differences between courses in overall and factual learning, the online students scored lower in conceptual and applied-conceptual learning. With the exception of factual learning, computing background appears to have a positive effect on performance.

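Table 1. Regression of test scores on computing background and course version.

| Predictors | All questions: Beta | All questions: P< | Factual questions: Beta | Factual questions: P< | Conceptual questions: Beta | Conceptual questions: P< | Applied conceptual questions: Beta | Applied conceptual questions: P< |
|---|---|---|---|---|---|---|---|---|
| Background | .23 | .001 | .11 | .075 | .24 | .001 | .17 | .007 |
| Course version | -.09 | .128 | -.00 | .946 | -.12 | .048 | -.24 | .001 |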
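The kind of model summarized in Table 1 can be illustrated with a minimal sketch. It assumes a regression of a test score on two predictors (computing background and a 0/1 course-version indicator) and uses the closed-form expression for standardized coefficients with two predictors. The data, the 0/1 coding, and the function names are hypothetical and chosen only for illustration; they are not the study's data or software.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def standardized_betas(y, x1, x2):
    """Standardized regression coefficients of y on x1 and x2.

    Uses the two-predictor closed form based on pairwise correlations.
    """
    r_y1, r_y2, r_12 = pearson(y, x1), pearson(y, x2), pearson(x1, x2)
    denom = 1 - r_12 ** 2
    beta1 = (r_y1 - r_y2 * r_12) / denom
    beta2 = (r_y2 - r_y1 * r_12) / denom
    return beta1, beta2


# Hypothetical toy data (NOT from the study): checklist score, course
# version (0 = lecture, 1 = online; an assumed coding), and test score.
background = [2, 5, 7, 3, 8, 4, 6, 1]
online = [0, 0, 0, 0, 1, 1, 1, 1]
score = [10, 14, 17, 12, 15, 11, 14, 8]

beta_bg, beta_online = standardized_betas(score, background, online)
print(f"background beta = {beta_bg:.2f}, course-version beta = {beta_online:.2f}")
```

Under this assumed coding, a negative course-version coefficient means lower scores in the online group after adjusting for background. Significance tests, which Table 1 reports as P values, are omitted from the sketch.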

To test the second research question, we added the interaction between course version and computing background to the models of Table 1. The interaction was non-significant in all four models (results not shown).
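As a rough illustration of what adding such an interaction term involves, the sketch below fits an unstandardized least-squares model with an intercept, background, a 0/1 course indicator, and their product. The data, the coding, and the small `ols` helper are hypothetical; the sketch estimates coefficients only and does not reproduce the significance tests used in the study.

```python
def ols(rows, y):
    """Least-squares coefficients for a design matrix given as a list of rows.

    Solves the normal equations (X'X) b = X'y by Gauss-Jordan elimination.
    """
    k = len(rows[0])
    # Augmented matrix [X'X | X'y]
    a = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * t for r, t in zip(rows, y))]
        for i in range(k)
    ]
    for col in range(k):
        # Partial pivoting: bring the largest remaining entry to the diagonal
        pivot = max(range(col, k), key=lambda idx: abs(a[idx][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for row in range(k):
            if row != col:
                factor = a[row][col] / a[col][col]
                a[row] = [v - factor * w for v, w in zip(a[row], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


# Hypothetical toy data (NOT from the study)
background = [2, 5, 7, 3, 8, 4, 6, 1]
online = [0, 0, 0, 0, 1, 1, 1, 1]  # assumed coding: 0 = lecture, 1 = online
score = [10, 14, 17, 12, 15, 11, 14, 8]

# Columns: intercept, background, course version, background x course version
design = [[1, b, c, b * c] for b, c in zip(background, online)]
coefs = ols(design, score)
print("interaction coefficient:", round(coefs[3], 3))
```

In a model of this form, the interaction coefficient equals the difference between the background slopes of the two groups, so a value near zero corresponds to background having a similar effect in both course versions.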

Turning to the third research question, Table 2 summarizes the midterm course evaluation data. The overall test of between-courses mean differences obtained by MANOVA was highly significant (Wilks’ Lambda=.85, F=5.80, d.f.=6, 169, p<.001), indicating that, overall, the two courses received different evaluations. The univariate F-tests were non-significant for Q5 (TA’s helpfulness) and Q6 (overall satisfaction) and significant for the other questions. Compared to the online students, the students of the lecture course perceived the course as more difficult (Q1), lighter in workload (Q2), and faster-paced (Q3), and the lecturer’s role as more helpful (Q4).

Table 2. Midterm course evaluation by course version, with univariate F-tests.

| Q | Lecture course: Mean | Lecture course: SD | Online course: Mean | Online course: SD | F | d.f. | P< |
|---|---|---|---|---|---|---|---|
| 1 | 3.07 | .70 | 2.81 | .76 | 4.66 | 1 | .033 |
| 2 | 2.74 | .71 | 3.20 | .77 | 13.87 | 1 | .001 |
| 3 | 3.35 | .73 | 3.11 | .65 | 4.90 | 1 | .029 |
| 4 | 3.52 | .86 | 3.23 | .78 | 4.81 | 1 | .031 |
| 5 | 3.72 | .86 | 3.56 | .91 | 1.27 | 1 | .262 |
| 6 | 3.48 | .91 | 3.39 | .67 | .61 | 1 | .436 |
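For readers who want to see how a univariate F statistic of this kind relates to group means and standard deviations, the following sketch computes a two-group one-way ANOVA F from summary statistics. The per-course numbers of respondents are not reported in the text, so the group sizes and all values in the example call are hypothetical, and the function name is an assumption made for illustration.

```python
def f_two_groups(m1, sd1, n1, m2, sd2, n2):
    """One-way ANOVA F statistic (1 and n1+n2-2 d.f.) from summary statistics."""
    grand_mean = (n1 * m1 + n2 * m2) / (n1 + n2)
    # Between-groups sum of squares; with two groups it has 1 degree of freedom
    ss_between = n1 * (m1 - grand_mean) ** 2 + n2 * (m2 - grand_mean) ** 2
    # Within-groups sum of squares recovered from the sample SDs
    ss_within = (n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2
    df_within = n1 + n2 - 2
    return ss_between / (ss_within / df_within)


# Hypothetical values (NOT from Table 2): two groups of 10 respondents each
f_value = f_two_groups(m1=3.0, sd1=1.0, n1=10, m2=2.0, sd2=1.0, n2=10)
print(f"F(1, 18) = {f_value:.2f}")
```

With two groups, this F equals the square of the pooled-variance t statistic, which is why each row of the table has a single numerator degree of freedom.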

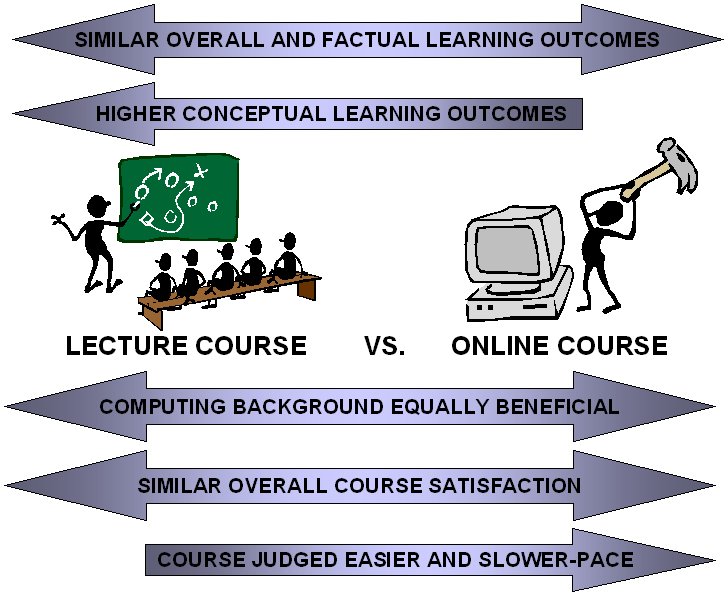

The learning outcome was similar in both courses when considering all common midterm questions or the subset of factual questions. Performance in the conceptual and applied conceptual questions was significantly predicted by attending the lecture course. Thus, the answer to the first research question is that the students of the online course obtained equal overall learning outcomes but lower results in conceptual and applied conceptual learning.

The online students’ poorer achievement in conceptual and applied conceptual learning may be related to the characterization of Hong Kong students as somewhat passive and non-exploratory learners [5] and to the fact that the Hong Kong education system seems to train students mostly in other-regulated, as opposed to self-regulated, learning [4]. As a result, the students of the online course may not have been able to get as much out of the course as the lecture students did.

The effect of computing background was equally beneficial in both courses. Thus, there would be no advantage in pre-selecting students for the online course based on their prior computing knowledge.

While students’ overall satisfaction with the courses did not differ, the online course was perceived as less difficult and slower-paced than the lecture course. These findings may again indicate the Hong Kong students’ problems with self-regulated learning. It is possible that, precisely because there was no lecturer constantly emphasizing the importance of the course contents and the need for studying, the online students underestimated the amount of effort they should have put into the course.

In our evaluation of students’ learning experience and outcomes, neither overall satisfaction nor overall performance differed across the lecture and online courses. These findings add to the Western body of evidence on the effectiveness of online learning, at least at the introductory undergraduate level.

The findings on the students’ factual and conceptual learning may be used as indicators in the development of online courses. First, the online students’ capacity to perform well on factual learning should encourage the development of undergraduate online courses as many such courses have an established factual content and involve a low degree of conceptual learning.

Second, the online students’ poorer performance in conceptual learning indicates that, most likely, the online course fell short in capturing the essence of the classroom teaching-learning interaction and in implementing it in the interactive features. Addressing these shortcomings requires a careful analysis of effective classroom activities and developing novel methods for achieving equal effectiveness online.

[1] S.D. Johnson, S.R. Aragon, N. Shaik, and N. Palma-Rivas. Comparative analysis of learner satisfaction and learning outcomes in online and face-to-face learning environments. Journal of Interactive Learning Research, 11(1):29-49.

[2] R. LaRose, J. Gregg, and M. Eastin. Audiographic telecourses for the Web: an experiment. Journal of Computer-Mediated Communication [On-line], 4(2). http://www.ascusc.org/jcmc/vol4/issue2/larose.html.

[3] P. Navarro and J. Shoemaker. Performance and perception of distance learners in cyberspace. The American Journal of Distance Education, 14(2):15-35.

[4] F. Salili. Achievement motivation: a cross-cultural comparison of British and Chinese students. Educational Psychology, 16(3):271-279.

[5] J.A. Spinks, L.M.O. Lam, and G. Van Lingen. Cultural determinants of creativity: an implicit theory approach. In S. Dingli (Ed.), Creative Thinking: Towards Broader Horizons. University of Malta Press, 1998.